JLL 2022 - Team 1

DAY 1

Objective: The single objective for JLL2022 is to INTEGRATE software and hardware components and DEMONSTRATE operation of the MANUFACTURING DIGITAL TWIN system, e.g., AVATAR ROBOTIC WORK-CELL DIGITAL TWIN.

The first day of AVATAR week started with a presentation of the University and its courses, projects, and the opportunities it offers. The sessions also introduced the topics we would work on, from the creation of the model to the digital twin implementation. We learned about the features the project draws on and the main advantages it would bring us in terms of knowledge and future work opportunities. The project gives us a powerful tool for improving manufacturing lines in the context of Industry 4.0, using virtual reality to evaluate spaces and operating conditions.

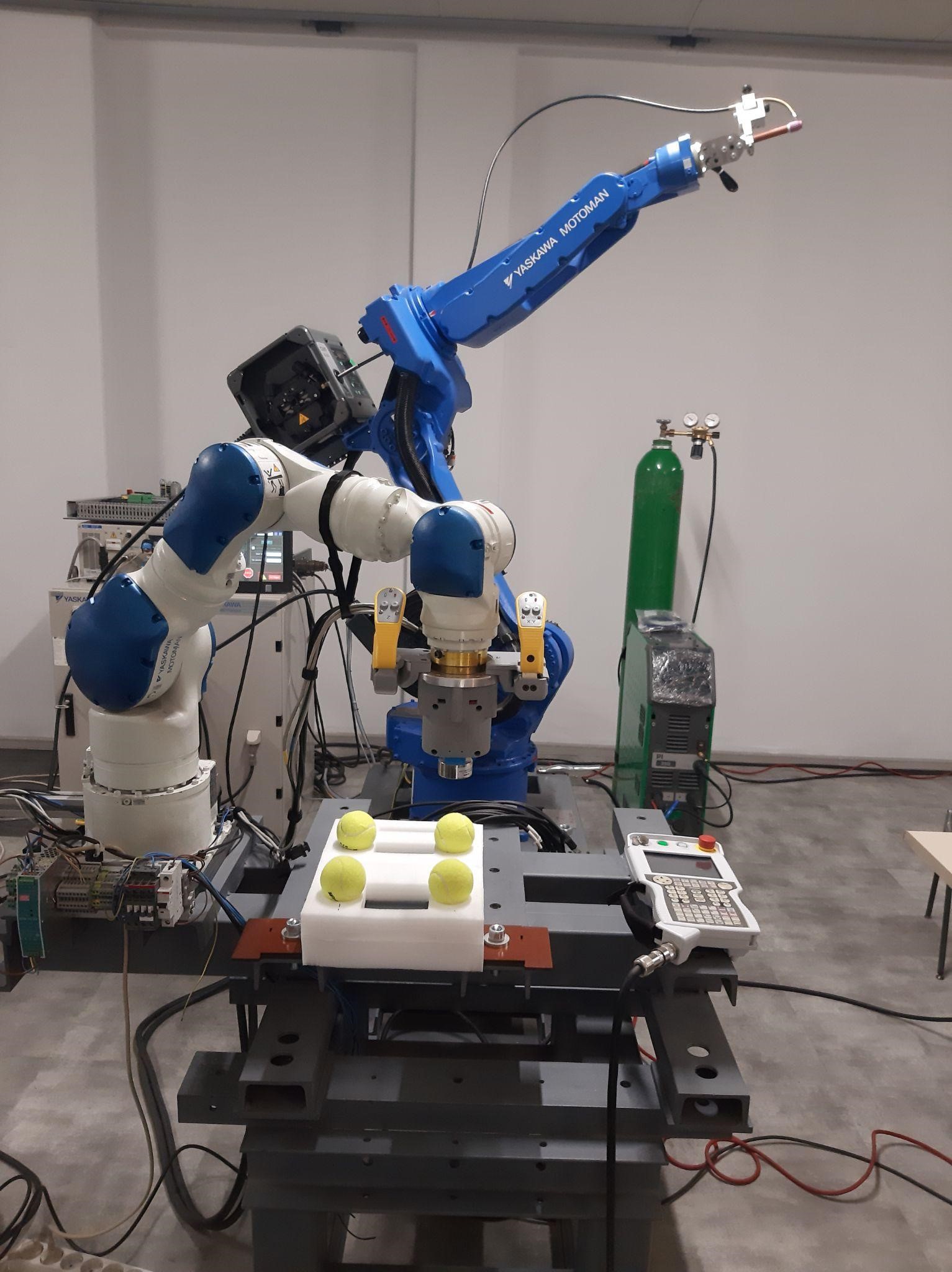

In the afternoon we held the first working session, devoted to CAD modeling of the real physical system for later use in the virtual reality simulation. The first step was to check the measurements of the real system so that the CAD model would match the physical robot. Using the reference dimensions of the “SIA 10F” robot, we opened the CAD model downloaded from the official site of Yaskawa, the robot's manufacturer, in SolidWorks and corrected its measurements. Another important step at this stage was to set up the reference coordinate system on each link correctly, since the simulation later implemented in Unity depends on it. Because the objective of this session was to create the scene in a virtual context, we exported the CAD model in glTF format and brought it into the simulation environment through a Unity import package. At the end of the day, after generating the robot and the simulation scene, we wrote some first scripts to start controlling the robot: very basic C# scripts that move simple objects.
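
The link reference frames matter partly because the two sides disagree on handedness: glTF is right-handed, while Unity is left-handed. The Python sketch below (Python is used only for illustration; it is not the team's C# code) shows one way such a conversion can be done. It assumes the importer mirrors the Z axis, which may not be what a given import package does, and the function names are hypothetical.

```python
# Illustrative sketch only: function names are hypothetical, and mirroring
# the Z axis is an assumption (an importer may mirror a different axis).


def position_rh_to_lh(position):
    """Map an (x, y, z) point from a right-handed frame to a left-handed
    frame by mirroring the Z axis."""
    x, y, z = position
    return (x, y, -z)


def quaternion_rh_to_lh(quaternion):
    """Map a rotation quaternion (x, y, z, w) across the same Z mirror.

    Mirroring Z reverses rotations about X and Y and keeps rotations
    about Z, so the x and y components change sign.
    """
    x, y, z, w = quaternion
    return (-x, -y, z, w)


if __name__ == "__main__":
    print(position_rh_to_lh((0.1, 0.5, 0.3)))
    print(quaternion_rh_to_lh((0.0, 0.7071, 0.0, 0.7071)))
```

Applying either function twice returns the original value, which is a quick sanity check that the mapping is a pure mirror.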

DAY 2

Morning session

Objective: XRSCENE ANIMATION - RWCKinematics Modeling

During this session we were introduced to RoboDK, software that solves direct and inverse kinematics problems for robots, and learned how to use it together with Unity.

Important steps for being able to repeat the protocol:

- Introduction to RoboDK, software that helps us solve direct and inverse kinematics problems.

- Import the “Motoman CSDA10F” robot model into RoboDK.

- Modify the reference systems in RoboDK to make them consistent with the Unity references.

- Add a new reference on the TCP to account for 20 mm that were missing in the RoboDK model compared to the real robot in the lab.

- Write code that generates the movement.

- Define some points to be our targets (target points).

- Define the type of movement the robot makes between targets: movJ = joint movement, movC = circular movement, movL = linear movement.

- Experiment with direct and inverse kinematics using the Run and Loop commands.

- On the Unity side, learn the logic behind the parent-child relation.
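
Direct and inverse kinematics, and the way a child link's angle is measured relative to its parent, can be illustrated on a much simpler arm than the lab robot. The Python sketch below uses a hypothetical two-link planar arm with made-up link lengths; it is not the RoboDK solver or the model of the real robot.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Direct kinematics: joint angles (radians) -> TCP position (x, y).

    theta2 is measured relative to the first link (its parent), so the
    absolute orientation of the second link is theta1 + theta2.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Inverse kinematics: TCP position -> one joint solution.

    Returns the solution with a non-negative elbow angle; a second,
    mirrored solution generally exists as well.
    """
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_t2) > 1.0:
        raise ValueError("target is out of reach")
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


if __name__ == "__main__":
    joints = inverse_kinematics(1.2, 0.5)
    print("joint angles:", joints)
    print("TCP from those angles:", forward_kinematics(*joints))
```

Feeding the result of the inverse problem back into the direct one should reproduce the target point, which is the same round trip we tried interactively in RoboDK.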

Lab visit

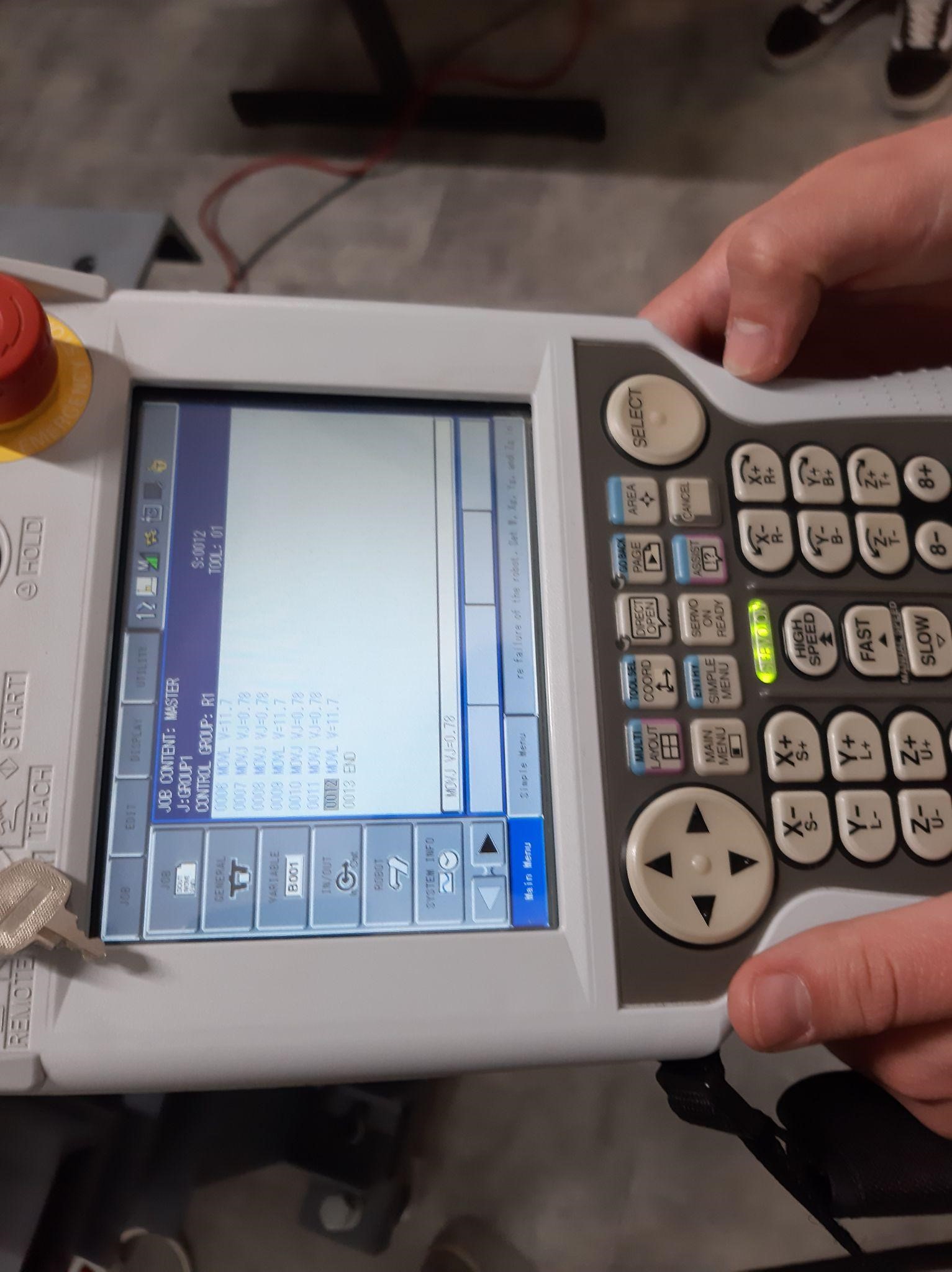

We went to the lab and experimented with robot programming by teaching; in particular, we got used to the logic behind the teach pendant. We were able to move the robot using both direct and inverse kinematics. We also learned about the safety issues and risks of programming and interacting with a real robot.

Afternoon session

Objective: XRSCENE ANIMATION - RWCKinematics Modeling

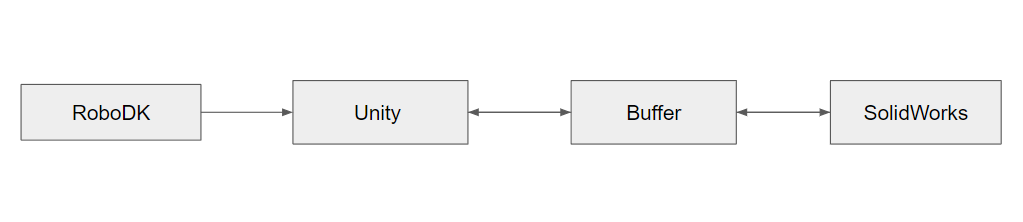

The main objective of this session was to analyze the APIs of RoboDK, Unity and SolidWorks and to understand how to implement communication between the three applications using the User Datagram Protocol (UDP).

The main characteristics of the communication layer are the following:

- A main code with an algorithm that reads new data (angles) only when a new message arrives in the buffer, and sends data (angles) when changes are made in one of the applications (Unity or SW).

- Another code, called by the main code, that converts angles, because the applications use different coordinate systems.

- Two more codes, called by the main codes, that convert the message to the UDP protocol convention.

As an example, here is the code from Unity that is used for communication with SolidWorks. Below is the main algorithm, which runs in every frame while Unity is running, because it is called from the void Update() method. The same code with minor additions is used in Visual Studio for SolidWorks communication with Unity.

    // Identifier glossary: uglovi = angles, stari_uglovi = old angles,
    // startuglovi = start angles, parsiraj = parse, ugao = angle.
    private void SolidWorks_DT()
    {
        if (UDP_client_SW.canRecieve() > 0)
        {
            // A message from SolidWorks is waiting: read it and apply the angles.
            try
            {
                uglovi = UDP_parser_SW.parsiraj(UDP_client_SW.udpRead());
            }
            catch
            {
                // Fall back to the start angles if reading or parsing fails.
                Array.Copy(startuglovi, 0, uglovi, 0, uglovi.Length);
            }
            Set_parameters(uglovi);
            Get_parameters();
            Array.Copy(uglovi, 0, stari_uglovi, 0, uglovi.Length);
        }
        else
        {
            // Nothing received: send the Unity angles unless
            // Array_Sequence_Offset reports them as matching the stored ones.
            Get_parameters();
            if (!Array_Sequence_Offset(uglovi, stari_uglovi, UDPAngleOffset))
            {
                Array.Copy(uglovi, 0, stari_uglovi, 0, uglovi.Length);
                // Three angles (x, y, z) for each of the seven joints.
                UDP_client_SW.UDP_send_string(
                    "ugao1_x:" + uglovi[0] + ";" + "ugao1_y:" + uglovi[1] + ";" + "ugao1_z:" + uglovi[2] + ";" +
                    "ugao2_x:" + uglovi[3] + ";" + "ugao2_y:" + uglovi[4] + ";" + "ugao2_z:" + uglovi[5] + ";" +
                    "ugao3_x:" + uglovi[6] + ";" + "ugao3_y:" + uglovi[7] + ";" + "ugao3_z:" + uglovi[8] + ";" +
                    "ugao4_x:" + uglovi[9] + ";" + "ugao4_y:" + uglovi[10] + ";" + "ugao4_z:" + uglovi[11] + ";" +
                    "ugao5_x:" + uglovi[12] + ";" + "ugao5_y:" + uglovi[13] + ";" + "ugao5_z:" + uglovi[14] + ";" +
                    "ugao6_x:" + uglovi[15] + ";" + "ugao6_y:" + uglovi[16] + ";" + "ugao6_z:" + uglovi[17] + ";" +
                    "ugao7_x:" + uglovi[18] + ";" + "ugao7_y:" + uglovi[19] + ";" + "ugao7_z:" + uglovi[20] + ";" + "\n");
                MWF = true;
            }
        }
    }

We can split the algorithm into two main parts, the if branch and the else branch. In the if branch, Unity takes data (joint angles) from SolidWorks when there is something in the buffer (sent from SW). In the else branch, Unity sends data (joint angles) to SolidWorks when the robot configuration has changed. The exchange is therefore event driven: Unity sends data only when the angle configuration in the Unity scene differs from the last stored one. The same applies to SW.
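
To make the message format and the event-driven rule concrete, here is a minimal Python sketch of the same idea. It is not the team's C# code: the function names, the threshold value and the loopback address are assumptions for illustration, and only the `ugaoN_axis:value;` message layout is taken from the listing above.

```python
import socket

AXES = ("x", "y", "z")


def build_message(angles):
    """Serialize angles as 'ugao1_x:<v>;ugao1_y:<v>;...;' plus a newline."""
    parts = []
    for index, value in enumerate(angles):
        joint = index // 3 + 1          # three angles per joint
        axis = AXES[index % 3]
        parts.append(f"ugao{joint}_{axis}:{value}")
    return ";".join(parts) + ";\n"


def parse_message(text):
    """Inverse of build_message: return the list of angle values."""
    fields = [field for field in text.strip().split(";") if field]
    return [float(field.split(":")[1]) for field in fields]


def changed(current, previous, threshold):
    """True if any angle differs from the stored one by more than threshold."""
    return any(abs(c - p) > threshold for c, p in zip(current, previous))


def demo():
    """Send one update over UDP on the loopback interface and read it back."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))     # let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    previous = [0.0] * 21
    current = list(previous)
    current[4] = 12.5                   # one joint angle was moved
    received = None
    if changed(current, previous, threshold=0.1):   # hypothetical threshold
        payload = build_message(current).encode("ascii")
        sender.sendto(payload, receiver.getsockname())
        data, _ = receiver.recvfrom(4096)
        received = parse_message(data.decode("ascii"))

    sender.close()
    receiver.close()
    return received
```

Calling `demo()` returns the 21 angles as they arrive on the receiving side. Because UDP gives no delivery guarantee, sending only on change keeps traffic low but also means a lost datagram is not repeated until the next change.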

Lab visit

During the second visit we had the opportunity to try the VR headset and take a virtual walk through a static scene. The scene showed the robotic welding cell, and we were able to examine its characteristics directly and in a very immersive way.

DAY 3

Morning session

Objective: RWC DIGITAL SHADOW Integration

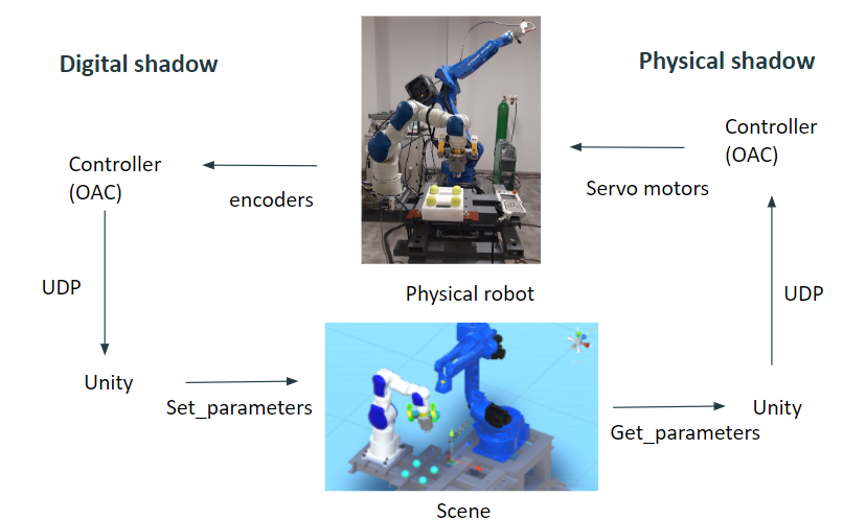

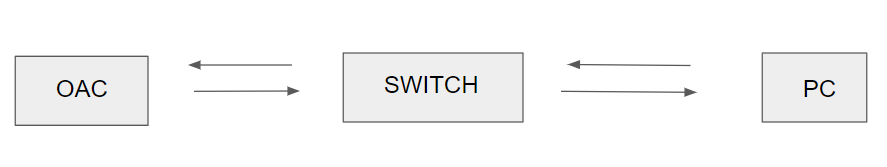

During the morning session we covered the following:

- An introduction to what a digital twin and a digital shadow are.

- The connection between the real robot and Unity.

- Analysis of a UDP code to better understand this connection.

- A study of the OAC (Open Architecture Controller), the kind of controller needed to enable this communication.

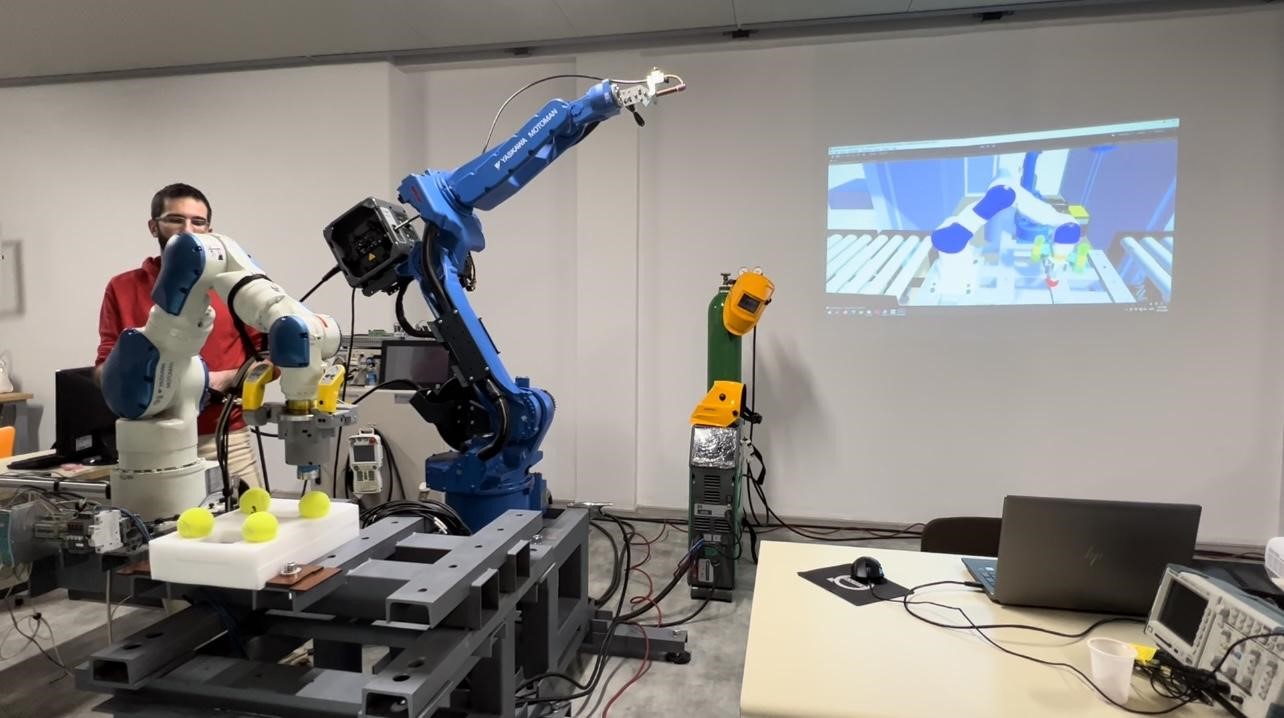

- We then tried out what we had studied: one team member used the Oculus headset to watch the digital shadow working while another moved the physical robot with the teach pendant.

- We went back to the code to understand how the data (angles) are sent from the robot controller to Unity and replicated in real time in the software (DigitalShadow).

- These angles are also saved by Unity in a file, so they can be played back later both in the software and on the physical robot (PhysicalShadow).
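
The record-and-replay idea in the last point can be sketched in a few lines of Python. This is only an illustration under assumptions: the CSV layout (timestamp followed by joint angles) and the function names are hypothetical, and the file format actually written by the Unity project is not described here.

```python
import csv
import io


def record(samples, stream):
    """Write (timestamp_seconds, angles) samples as CSV rows."""
    writer = csv.writer(stream)
    for timestamp, angles in samples:
        writer.writerow([timestamp, *angles])


def replay(stream):
    """Yield (delay_since_previous_sample, angles) pairs for playback."""
    previous = None
    for row in csv.reader(stream):
        timestamp = float(row[0])
        angles = [float(value) for value in row[1:]]
        delay = 0.0 if previous is None else timestamp - previous
        previous = timestamp
        yield delay, angles


if __name__ == "__main__":
    log = io.StringIO()                       # stands in for a file on disk
    record([(0.0, [0.0, 10.0]), (0.5, [1.0, 11.0])], log)
    log.seek(0)
    for delay, angles in replay(log):
        print(f"wait {delay} s, then apply {angles}")
```

Storing timestamps rather than only angles lets the same log drive the virtual robot at recorded speed or be re-timed before it is sent to the physical robot.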

Lab visit

Afternoon session

Objective: Manipulating the robot Manually

- First, an explanation of the manual robot controller, including technical information about the sensors that transfer the human input forces to the robot controller.

- Analysis of load cells and the Wheatstone bridge principle on which they work.

- Introduction to the mechanical structure of the haptic device, in particular the spring system, the safety switch and the 3D printed components.

- Moving the two virtual robots with the Oculus joysticks in VR.

- Seeing the manual robot controller in action, maneuvered to generate a trajectory and a job for the robot.

- Visualizing the generated trajectory in MATLAB, filtering it and viewing its 3D representation.

- Observing an expert maneuvering the physical robot through virtual reality using the Oculus system, which was our first experience with a digital twin.
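
The trajectory filtering step was done in MATLAB, and the kind of filter used is not recorded here. As a rough illustration of what smoothing a hand-guided trajectory involves, the Python sketch below applies a centered moving average to a list of 3D points; the choice of a moving average and the window size are assumptions.

```python
def moving_average(points, window=3):
    """Smooth a list of (x, y, z) points with a centered moving average.

    Near the ends of the trajectory the window shrinks to the samples
    that are available, so the output has the same length as the input.
    """
    half = window // 2
    smoothed = []
    for i in range(len(points)):
        lo = max(0, i - half)
        hi = min(len(points), i + half + 1)
        count = hi - lo
        smoothed.append(
            tuple(sum(p[k] for p in points[lo:hi]) / count for k in range(3))
        )
    return smoothed


if __name__ == "__main__":
    # A straight line along x with one noisy sample in the middle.
    raw = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.9, 0.0),
           (3.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
    for point in moving_average(raw):
        print(point)
```

A larger window removes more hand tremor but also rounds off sharp corners of the demonstrated path, so the window is a trade-off rather than a fixed value.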

DAY 4

Morning session

Objective: Operation of the integrated RWC Digital Twin and GAME Based LEARNING

- XRProgramming by Demonstration

- Each team member programmed the robotic arm to complete the Touch and Jump task.

- To do this, we used three kinds of control: a) the KINESTHETIC haptic controller, b) the KINESTHETIC haptic controller with XRHMD visual feedback, c) the TELEOPERATION haptic controller with XRHMD visual feedback.

- The learned robot trajectory is recorded from the first movement of the robot until the last point of the task, and then reproduced by the virtual robot in its virtual task space and by the physical robot in its physical task space.

Lab visit

We went to the lab and started programming the physical robot to complete the Touch and Jump task. We first used the KINESTHETIC haptic controller without VR and then with VR. In this way we programmed the robot by demonstration (PbD).

Afternoon session

Objective: Operation of the integrated RWC Digital Twin and GAME Based LEARNING

In the afternoon session we focused on a further lab visit and on preparing the final presentation.

Lab visit

In the second lab visit we had the same task, but this time we used the TELEOPERATION haptic controller, first without VR and then with VR.

DAY 5

Objective: Operation of the integrated RWC Digital Twin and GAME Based LEARNING

In the morning session we did our last lab visit and finished our final presentation. In between, we filled in the questionnaires prepared by Marta (psychologist) and gave an interview.

Lab visit

In our last lab visit we had a similar task, but this time we used only VR technology. To move the robot we used the Oculus Rift S controllers: while holding the appropriate button we could control the TCP of the robot. The virtual robot was not connected to the physical system, so its speed was not limited by the physical hardware and we were able to complete the task swiftly.
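
One common way to implement this kind of hold-to-move control is a clutch: the TCP follows the displacement of the hand controller only while the button is held. The Python sketch below illustrates that idea with a hypothetical class; how the Unity scene actually maps controller motion to the TCP is an assumption on our part.

```python
class ClutchedTcpControl:
    """Move a TCP target by the hand displacement while a button is held."""

    def __init__(self, tcp_position):
        self.tcp = tuple(tcp_position)
        self._last_hand = None  # hand position at the previous held frame

    def update(self, hand_position, button_held):
        """Process one frame and return the current TCP target."""
        if button_held and self._last_hand is not None:
            # Apply the hand displacement since the previous frame.
            self.tcp = tuple(
                t + (h - last)
                for t, h, last in zip(self.tcp, hand_position, self._last_hand)
            )
        # Remember the hand only while the button is held; releasing it
        # lets the user reposition the hand without moving the robot.
        self._last_hand = tuple(hand_position) if button_held else None
        return self.tcp


if __name__ == "__main__":
    control = ClutchedTcpControl((0.0, 0.0, 0.0))
    frames = [((1.0, 0.0, 0.0), True), ((1.5, 0.0, 0.0), True),
              ((5.0, 0.0, 0.0), False), ((5.0, 0.0, 0.0), True),
              ((5.0, 1.0, 0.0), True)]
    for hand, held in frames:
        print(control.update(hand, held))
```

In a purely virtual scene nothing limits how fast the target may move, which is consistent with how quickly we could finish the task; a link to the physical robot would need a speed limit on top of this.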