Design a Human Machine Interface for AR

The purpose of the study is to design a Human Machine Interface (HMI) in AR for a digital twin, allowing the visualisation of, and interaction with, the indicators that are part of Supply Chain management (Production, Logistics, Maintenance, etc.) using the HoloLens 1. The indicators must be linked to a 5-axis CNC machine. The interface also aims to make it possible to control the machine and to simulate certain scenarios.

Poster

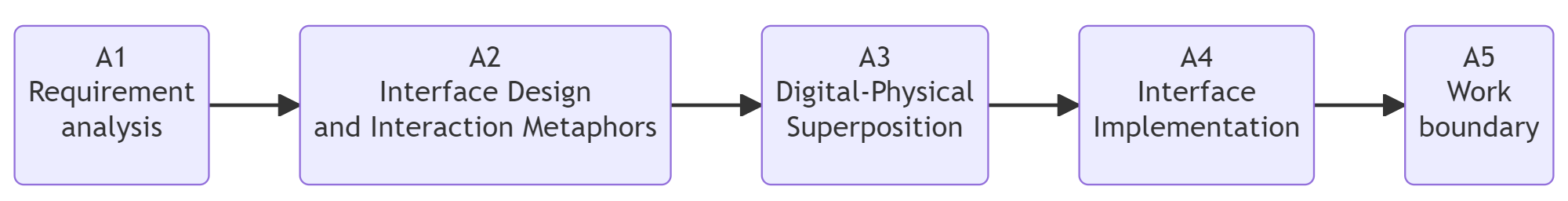

Workflow development

| INPUTS: Physical CNC Machine - 3D Models |  | OUTPUTS: AR interface for enhanced CNC Machine operation |

Workflow Description

| Activities | Overview |
|---|---|
| A1 Requirement analysis | • Description: Start with an in-depth analysis of the case study. In this case, the CNC machine was not in operation. A more in-depth study would require observing an operator at work, identifying the needs more precisely, knowing what kind of information the digital 5-axis machine gives, and considering ways to improve its visualization and accessibility using augmented reality (AR) technology. • Input: CNC machine, current and historical data • Output: A detailed report on the added value of AR in the operational machine context • Control: Feedback from operators and technicians, correlation between the indicators identified for AR and the workstation needs • Resource: Machine operator, technician, machine operation manual |

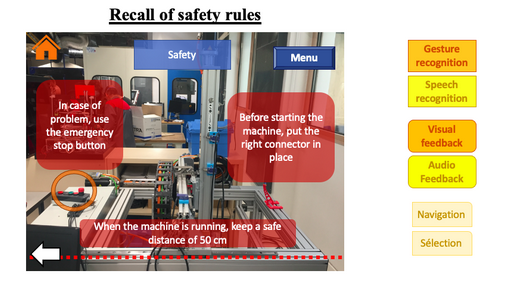

| A2 Interface Design and Interaction Metaphors | • Description: Using the virtual content to be rendered in AR (3D models, indicators, information) as a foundation, conceptualize the interface and the possible types of interaction for the Human Machine Interface. These cover Human → Machine functions (gesture recognition, gaze recognition, speech recognition) and Machine → Human functions (visual feedback, audio feedback, haptic feedback). • Input: AR enhancement opportunities • Output: AR interface design, including interaction methods and device feedback • Control: AR interaction effectiveness • Resource: UX/UI concepts, AR development tools (Unity 3D and MRTK) |

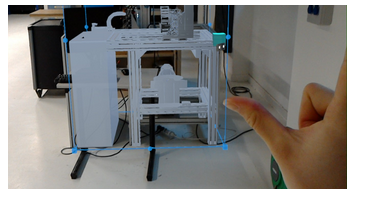

| A3 Digital-Physical superposition | • Description: Starting the development process requires knowledge of a platform capable of building and deploying AR. In this scenario, Unity 3D and MRTK were selected. The next step is to implement a system that superimposes a digital copy of the machine on the real one, placing the virtual content accurately in the physical space. Manual overlay was an option, but it proved ineffective because of the large size of the machine and the restricted field of view of the HoloLens 1. The best way to improve this procedure is to anchor the model via a QR code. • Input: The digital representation of the machine • Output: Superimposition of the digital copy on the real machine • Control: Precision of superposition and stability of AR tracking • Resource: Unity engine, MRTK, C# for scripting, HoloLens 1 |

| A4 Interface Implementation | • Description: Create an interface using MRTK’s built-in UI/UX elements, which include a holographic menu with multiple “views” or pages. These elements provide functions to place, show, and hide different pages as needed. A key aspect of the interface is its ability to follow the user’s gaze, ensuring that the menu is always visible to the user. This is an additional challenge because gaze tracking suffers from latency and a lack of fluidity. • Input: AR interface design • Output: A functional and interactive AR interface • Control: User feedback • Resource: MRTK UI/UX design principles |

| A5 Work boundary | • Description: A last important feature is the incorporation of a safety zone, using a “safety on/off” function that dynamically creates a visual safety zone (in the form of a cube) around the machine. This function is controlled by a button in the AR interface menu. When activated, the safety zone becomes visible, indicating the boundary to respect for safe operation. • Input: Machine dimensions and safety parameters • Output: A visible, highlighted safety zone in AR that the user can toggle on and off • Control: Dimensions and user feedback • Resource: Unity engine, MRTK, C# for scripting, HoloLens 1 |
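
The geometry behind activities A3 and A5 can be illustrated with a small, engine-agnostic sketch. It is not the project's Unity/MRTK code: it is plain Python, and it assumes that the tracker returns the pose of the QR code in world coordinates, that the offset between the QR code and the machine origin has been measured once, and that rotations are limited to a yaw about the vertical axis. All function names and numeric values are hypothetical.

```python
import math

def pose(position, yaw_deg):
    """Build a pose as (3x3 rotation, translation); rotation is a yaw about the vertical y axis."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    rotation = [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]
    return rotation, list(position)

def transform_point(p, point):
    """Map a point from the pose's local frame into its parent frame."""
    rotation, translation = p
    return [sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
            for i in range(3)]

def compose(parent, child):
    """Chain two poses: the child is expressed in the parent's local frame."""
    rp, _ = parent
    rc, tc = child
    rotation = [[sum(rp[i][k] * rc[k][j] for k in range(3)) for j in range(3)]
                for i in range(3)]
    return rotation, transform_point(parent, tc)

def safety_zone_corners(world_from_machine, half_extents, margin):
    """Return the 8 world-space corners of a box around the machine, enlarged by a margin."""
    h = [e + margin for e in half_extents]
    corners = []
    for sx in (-1, 1):
        for sy in (-1, 1):
            for sz in (-1, 1):
                local = [sx * h[0], sy * h[1], sz * h[2]]
                corners.append(transform_point(world_from_machine, local))
    return corners

# Hypothetical values: QR code detected 2 m right, 1 m up, 3 m ahead, rotated 90 degrees.
world_from_qr = pose((2.0, 1.0, 3.0), 90.0)
# Assumed offset of the machine origin relative to the QR code, measured once.
qr_from_machine = pose((0.5, -1.0, 0.0), 0.0)

world_from_machine = compose(world_from_qr, qr_from_machine)
corners = safety_zone_corners(world_from_machine, (1.0, 1.0, 1.0), 0.5)
print("machine origin in world:", [round(v, 3) for v in world_from_machine[1]])
print("safety zone corners:", len(corners))
```

Because the digital copy and the safety zone are both derived from the single QR pose, re-detecting the code re-aligns them together. A "safety on/off" button would then only need to show or hide the box built from these corners.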

Results

We obtained sufficiently accurate anchoring and an interactive interface that is useful for an operator. The design of the interface still has to be improved. One disadvantage is that with the HoloLens 1, selecting an object requires pointing at it by moving your head, which can be tiring and uncomfortable. The HoloLens 2 may resolve this problem and offer even more possibilities!
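
To make the head-pointing constraint concrete, here is a minimal sketch of head-gaze selection: a ray leaves the head position along the viewing direction, and the closest box it crosses is the selected object. This is an illustration in plain Python, not the MRTK implementation. The function names, target names and box coordinates are hypothetical.

```python
def gaze_hits_box(origin, direction, box_min, box_max):
    """Slab test: distance along the ray to an axis-aligned box, or None if the ray misses."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of faces: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near = max(t_near, t1)
        t_far = min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near

def pick_target(origin, direction, targets):
    """Return the name of the closest box hit by the head-gaze ray, or None."""
    best = None
    for name, (box_min, box_max) in targets.items():
        t = gaze_hits_box(origin, direction, box_min, box_max)
        if t is not None and (best is None or t < best[0]):
            best = (t, name)
    return best[1] if best else None

# Hypothetical scene: a menu button 1 m ahead and a machine panel 2 m ahead.
targets = {
    "menu_button": ((-0.1, 1.5, 1.0), (0.1, 1.7, 1.1)),
    "machine_panel": ((-0.5, 1.0, 2.0), (0.5, 2.0, 2.5)),
}
head_position = (0.0, 1.6, 0.0)
print(pick_target(head_position, (0.0, 0.0, 1.0), targets))  # looking straight ahead
print(pick_target(head_position, (1.0, 0.0, 0.0), targets))  # looking to the side
```

Since the ray direction is tied to the orientation of the head, every selection requires a head movement, which explains the fatigue noted above.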

Conclusion

This is a method to anchor a digital machine to a real one and to create interactive interfaces. Achieving this requires a background in programming and robotics. Now that the real and virtual machines are anchored and the interface and its information interactions are in place, the next step would be to collect data from the real machine and to act on it by interacting with the virtual one.

Avatar for me

When welcoming the students for the oral exam of “Génie industriel - Grenoble INP”, I showed and discovered the R room with all these fascinating technologies. When I received the email presenting the project, I took the opportunity to learn how to use these tools! I had no skills in XR, but I had a background in CAD, kinematics and code editing. I was also interested in working on applications of VR and AR in industry within the Supply Chain (to improve processes and reduce any kind of waste, for instance). I therefore started to research virtual reality and augmented reality applications in the Supply Chain.

As an engineering student, I was excited to develop competencies in innovative Industry 4.0 technologies that support industrial performance while taking social and human factors into account. I have made a lot of progress and still have so much to learn about these incredible tools. I would really like to improve and learn further steps in order to find good uses of AR within the Supply Chain, and more specifically to improve environmental performance.