

Teaching workflows

Following the approach we described as the main teaching and learning method, we now detail the AVATAR teaching workflows.

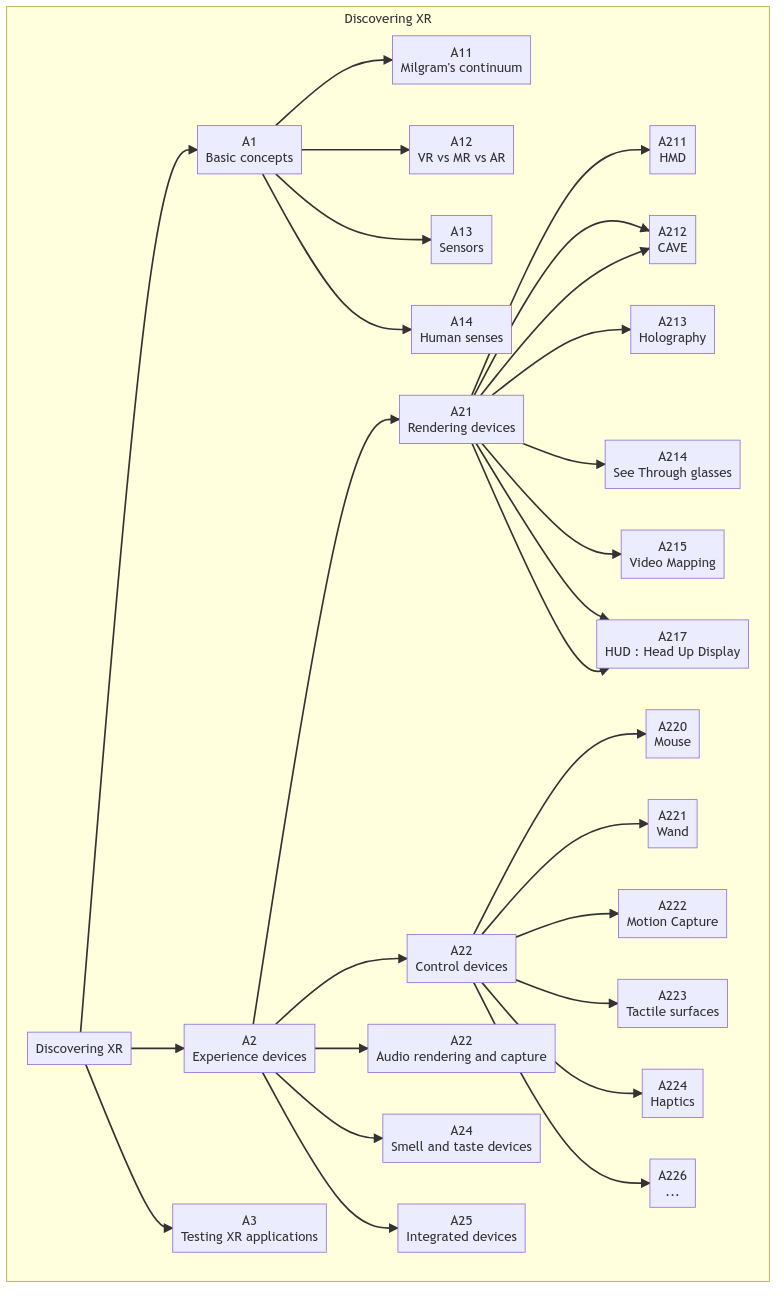

Discovering XR

This process teaches the basic concepts and provides initial hands-on practice. Perceiving the varying degree of presence offered by different devices (HMDs, CAVEs, etc.) is the very first step towards understanding XR. It is also a major step in distinguishing what is realistic today, or will be in the next few years, from commercial announcements.

The process is divided into three main steps:

- XR concepts, definitions and presentations. Milgram’s continuum makes it possible to discuss the variety of conceptual applications, from augmented reality to virtual reality. A description of the main sensors used in VR and AR is also important for understanding the capabilities and limits of context tracking and human operator tracking. Human sensors and senses are also expected to explain how VR…

- Experiencing devices to test perception. A wide range of devices exists for visual rendering or for controlling XR scenes, including devices that address other senses. Students can never test every potential device, but demonstrating various types of devices gives them a view of the heterogeneity of XR, which is not limited to head-mounted devices.

- Experiencing integrated applications. Demonstrations of existing applications are also important.

These three steps are detailed in the following diagram.

Conceptual roadmap for the discovery of Extended Reality

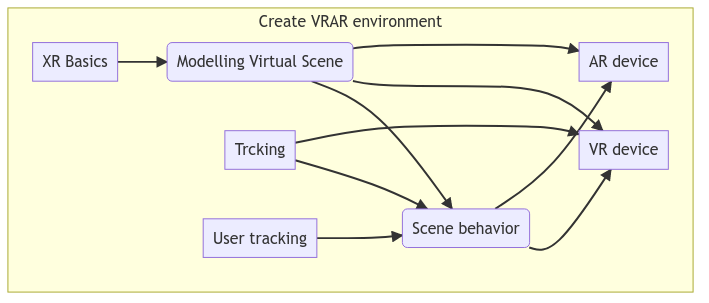

Developing XR environments

Training students to develop new XR environments first requires a basic knowledge of XR. The previous workflow is dedicated to this prerequisite.

- Development always requires the creation of 2D or 3D scenes. Many tools exist to create visual scenes, but XR relies mainly on images and polyhedral models, so expert models must be converted into XR scenes. The conversion tools must be explained and the conversion parameters clearly understood. Modelling the virtual scene is a mandatory step.

- The 3D scene on its own is static and provides no interaction. The second step in developing an XR environment is therefore the integration of behaviors, either by developing small scripts or by interconnecting complex simulations.

- The basics of tricking the perceptual senses, and of linking user tracking to the scene behavior, must be introduced at this point to lead students towards high-quality XR environments.

- Finally, connecting to an AR or VR device completes a first version of the XR environment. This includes controller mapping and compilation of the application.
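The first step in the list above converts expert models into polyhedral models, and its conversion parameters decide both the visual quality and the rendering cost of the XR scene. The Python sketch below illustrates one such parameter, a chordal tolerance, on an analytic cylinder that stands in for a CAD surface. The function names and the tolerance rule are assumptions for illustration, not the interface of any particular conversion tool; conversion tools typically expose comparable settings under their own names.

```python
import math


def segments_for_tolerance(radius: float, chord_tol: float) -> int:
    """Smallest number of polygon segments approximating a circle of the given
    radius whose chordal deviation (sagitta) does not exceed chord_tol."""
    if radius <= 0 or chord_tol <= 0:
        raise ValueError("radius and chord_tol must be positive")
    # A chord spanning angle a deviates from the arc by r * (1 - cos(a / 2)),
    # so the deviation stays within chord_tol when a <= 2 * acos(1 - chord_tol / r).
    max_angle = 2.0 * math.acos(max(-1.0, 1.0 - chord_tol / radius))
    return max(3, math.ceil(2.0 * math.pi / max_angle))


def tessellate_cylinder(radius: float, height: float, chord_tol: float):
    """Convert an analytic cylinder (standing in for a CAD surface) into a
    triangle mesh of its lateral surface; caps are omitted for brevity."""
    n = segments_for_tolerance(radius, chord_tol)
    vertices = []
    for z in (0.0, height):  # bottom ring: indices 0..n-1, top ring: n..2n-1
        for i in range(n):
            a = 2.0 * math.pi * i / n
            vertices.append((radius * math.cos(a), radius * math.sin(a), z))
    triangles = []
    for i in range(n):
        j = (i + 1) % n
        triangles.append((i, j, j + n))      # two triangles per side quad,
        triangles.append((i, j + n, i + n))  # counter-clockwise seen from outside
    return vertices, triangles


def to_obj(vertices, triangles) -> str:
    """Serialise the mesh as Wavefront OBJ text (face indices are 1-based)."""
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in triangles]
    return "\n".join(lines) + "\n"


for tol in (0.1, 0.01):  # same cylinder, two conversion tolerances (model units)
    v, t = tessellate_cylinder(radius=10.0, height=50.0, chord_tol=tol)
    print(f"chord tolerance {tol}: {len(v)} vertices, {len(t)} triangles")
```

Tightening the tolerance from 0.1 to 0.01 roughly triples the triangle count here, which is the kind of trade-off between visual fidelity and real-time rendering cost that students need to understand when setting conversion parameters.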

Workflow for Creating a VR/AR Environment: From XR Basics to Device Integration

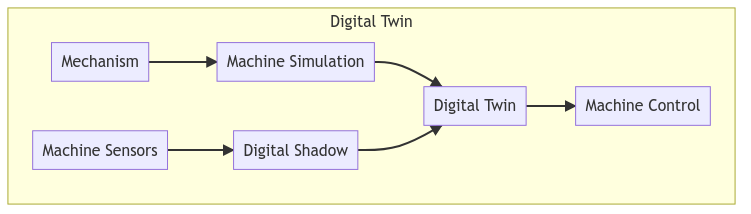
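The later steps of this workflow (adding behaviors, linking user tracking to the scene, and mapping controllers) all meet in the per-frame loop of an XR application. The Python sketch below shows that data flow under simplifying assumptions: the tracked head pose is reduced to a position plus a yaw angle, the controller bindings (`trigger`, `button_a`) and behavior functions are hypothetical, and nothing here reproduces the API of a specific engine or XR runtime.

```python
from __future__ import annotations

import math
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Pose:
    """Tracked head pose, simplified to a position and a yaw angle (radians)."""
    x: float
    y: float
    z: float
    yaw: float  # rotation about the vertical (y) axis


def view_matrix(head: Pose) -> list[list[float]]:
    """World-to-eye transform: the inverse of the tracked head transform."""
    c, s = math.cos(head.yaw), math.sin(head.yaw)
    # Head rotation R_y(yaw) = [[c, 0, s], [0, 1, 0], [-s, 0, c]]; its inverse is the transpose.
    rt = [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]
    p = (head.x, head.y, head.z)
    t = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]  # -R^T * p
    return [rt[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Hypothetical device-specific inputs mapped to device-independent actions.
CONTROLLER_MAP = {"trigger": "grab", "button_a": "toggle_light"}


@dataclass
class SceneObject:
    name: str
    angle: float = 0.0  # current rotation, radians
    lit: bool = False
    behaviors: list[Callable[[SceneObject, float, set[str]], None]] = field(default_factory=list)


def spin(obj: SceneObject, dt: float, actions: set[str]) -> None:
    """Continuous behavior script: rotate at 0.5 rad/s."""
    obj.angle += 0.5 * dt


def light_switch(obj: SceneObject, dt: float, actions: set[str]) -> None:
    """Event-driven behavior script: toggle the light on the mapped action."""
    if "toggle_light" in actions:
        obj.lit = not obj.lit


def frame(objects: list[SceneObject], head: Pose, pressed: set[str], dt: float):
    """One application frame: map raw inputs, run behaviors, update the view."""
    actions = {CONTROLLER_MAP[b] for b in pressed if b in CONTROLLER_MAP}
    for obj in objects:
        for behavior in obj.behaviors:
            behavior(obj, dt, actions)
    return view_matrix(head)


lamp = SceneObject("lamp", behaviors=[spin, light_switch])
view = frame([lamp], Pose(0.0, 1.7, 2.0, 0.0), pressed={"button_a"}, dt=1 / 90)
print(lamp.lit, lamp.angle, view[1][3])
```

Recomputing the view matrix from the tracked pose at every frame is what keeps the rendered scene consistent with the user's motion. Keeping bindings in a table, separate from the behaviors, is one way to let the same scene be connected to different AR or VR devices.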

Specification of Industry 4.0 environments

AVATAR also developed a specific application workflow aimed at developing machine digital twins. This workflow complements the development of XR environments and focuses on creating a scene that includes behaviors. A cornerstone is the integration of machine behavior. A machine is a specific mechanism whose underlying behavior is driven by a kinematic model, and this model must be executed by a simulator. Various simulators exist, ranging from modules integrated into CAD modellers (SolidWorks, Creo, CATIA, NX, etc.) to physics game engines (ODE, Bullet, PhysX). A machine is also usually observed through various sensors. Connecting to these sensors in real time makes it possible to animate the virtual scene so that it follows the physical behavior, which corresponds to the digital shadow concept. Integrating the digital shadow with the machine simulation creates a digital twin, which enables the machine to be controlled from the virtual space.
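The distinction between the kinematic model, the digital shadow and the digital twin can be made concrete with a small Python sketch. It assumes a hypothetical planar arm with two revolute joints whose encoders are its only sensors; the link lengths, the joint limit and the `send_to_machine` callback are illustrative assumptions, and the linear joint interpolation merely stands in for a real simulator such as those listed above.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoLinkArm:
    """Kinematic model of a hypothetical planar arm with two revolute joints."""
    l1: float = 0.5  # link lengths in metres (illustrative values)
    l2: float = 0.3
    q1: float = 0.0  # joint angles in radians
    q2: float = 0.0

    def forward_kinematics(self) -> tuple[float, float]:
        """End-effector position (x, y) computed from the joint angles."""
        x = self.l1 * math.cos(self.q1) + self.l2 * math.cos(self.q1 + self.q2)
        y = self.l1 * math.sin(self.q1) + self.l2 * math.sin(self.q1 + self.q2)
        return x, y


class DigitalShadow:
    """Virtual machine animated only by real-time sensor (encoder) readings."""

    def __init__(self, model: TwoLinkArm):
        self.model = model

    def on_sensor_update(self, encoder_angles: tuple[float, float]) -> tuple[float, float]:
        self.model.q1, self.model.q2 = encoder_angles
        return self.model.forward_kinematics()  # pose to display in the XR scene


class DigitalTwin(DigitalShadow):
    """Digital shadow plus simulation: commands are checked and simulated
    before being sent to the physical machine."""

    def __init__(self, model: TwoLinkArm, send_to_machine, joint_limit: float = math.pi / 2):
        super().__init__(model)
        self.send_to_machine = send_to_machine  # hypothetical link to the machine controller
        self.joint_limit = joint_limit

    def simulate(self, target: tuple[float, float], steps: int = 50):
        """Linear joint interpolation from the current (sensed) state to target,
        returning the end-effector path; a stand-in for a real simulator."""
        q0 = (self.model.q1, self.model.q2)
        sim = TwoLinkArm(self.model.l1, self.model.l2)
        path = []
        for k in range(1, steps + 1):
            s = k / steps
            sim.q1 = q0[0] + s * (target[0] - q0[0])
            sim.q2 = q0[1] + s * (target[1] - q0[1])
            path.append(sim.forward_kinematics())
        return path

    def command(self, target: tuple[float, float]):
        """Control from the virtual space: reject targets beyond the joint limit,
        otherwise simulate the motion and forward the target to the machine."""
        if any(abs(q) > self.joint_limit for q in target):
            return None  # rejected in the virtual space; nothing is sent
        path = self.simulate(target)
        self.send_to_machine(target)
        return path


sent = []
twin = DigitalTwin(TwoLinkArm(), send_to_machine=sent.append)
print(twin.on_sensor_update((0.0, 0.0)))  # shadow: follow the encoders
print(twin.command((3.0, 0.0)))           # target beyond the joint limit
print(len(twin.command((0.5, -0.5))), sent)
```

In this sketch the virtual state changes only through sensor readings, never directly through commands: a command is checked and simulated in the virtual space, sent to the machine, and the virtual machine moves once the encoders report the new position.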

Workflow representing the components and interactions of a Digital Twin system.

All these steps are participating to training students to specify a digital twin of machine for industry 4.0 context. Most projects with students during the AVATAR projects wer based on this application, controlling either a machine, a robot or more complex manufacturing cells. It requires to pass through the two previous workflows.

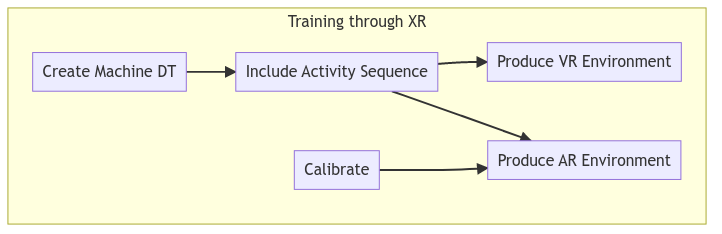

Developing training and assessment environments for machines

The last training workflow explored within AVATAR concerns the use of XR to train students to operate a specific machine and to assess this training. In this case, a machine digital twin must first be created following the previous workflow; here this step is assumed to be completed, so the digital twin environment is ready. A specific usage of the digital twin is then expected, providing predefined sequences of tasks. Machine training consists of following and repeating these task sequences. Producing the XR environment integrates the “Developing XR environments” workflow, in which particular attention must be paid to a calibration step when an AR environment is expected. Each of these steps also requires defining the various ways of carrying it out. For example, calibration can be performed through tag recognition, through SLAM techniques with predefined anchors, through image analysis, etc.
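Tag-based calibration amounts to estimating the rigid transform between the digital twin's frame and the frame in which the AR device detects the tags. The Python sketch below solves the least-squares version of this problem, the planar case of the Kabsch/Procrustes fit, under an explicitly assumed simplification: the AR device already provides a gravity-aligned frame, so only a rotation about the vertical axis and a horizontal translation remain to be estimated. The tag positions and function names are hypothetical.

```python
import math


def fit_rigid_2d(model_pts, measured_pts):
    """Least-squares rotation angle and translation mapping model_pts onto
    measured_pts (planar Kabsch/Procrustes problem, no scaling)."""
    n = len(model_pts)
    if n < 2 or n != len(measured_pts):
        raise ValueError("need at least two point correspondences")
    mcx = sum(p[0] for p in model_pts) / n
    mcy = sum(p[1] for p in model_pts) / n
    scx = sum(p[0] for p in measured_pts) / n
    scy = sum(p[1] for p in measured_pts) / n
    dot = cross = 0.0
    for (mx, my), (sx, sy) in zip(model_pts, measured_pts):
        ax, ay = mx - mcx, my - mcy  # centred model point
        bx, by = sx - scx, sy - scy  # centred measured point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # angle maximising the sum of b . (R a)
    c, s = math.cos(theta), math.sin(theta)
    t = (scx - (c * mcx - s * mcy), scy - (s * mcx + c * mcy))  # t = centroid_b - R centroid_a
    return theta, t


def transform_point(theta, t, p):
    """Map a point from the digital-twin frame to the AR device frame."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


def rms_error(theta, t, model_pts, measured_pts):
    """Root-mean-square residual of the fit, a simple calibration-quality check."""
    total = 0.0
    for m, s in zip(model_pts, measured_pts):
        qx, qy = transform_point(theta, t, m)
        total += (qx - s[0]) ** 2 + (qy - s[1]) ** 2
    return math.sqrt(total / len(model_pts))


# Hypothetical tag positions on the machine (twin frame, metres) and the same
# tags as seen by the AR device after a 30 degree turn and a shift.
tags = [(0.0, 0.0), (1.0, 0.0), (0.0, 0.5)]
detected = [transform_point(math.radians(30), (1.0, 2.0), p) for p in tags]
theta, t = fit_rigid_2d(tags, detected)
print(math.degrees(theta), t, rms_error(theta, t, tags, detected))
```

With synthetic, noise-free detections the residual is essentially zero; with real tag detections it will not be, and checking it is one way to decide whether the AR overlay is accurate enough before training starts.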

Workflow illustrating the training process through XR
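The training and assessment workflow described above (following and repeating predefined task sequences, then assessing the trainee) can be sketched as a comparison between the expected sequence and the trainee's logged actions. In the Python sketch below, the step names, the strict-order matching rule and the scoring formula (completion ratio minus a fixed penalty per error) are assumptions chosen for illustration, not a description of how AVATAR assessed trainees.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSequence:
    """A predefined sequence of machine operations (step names are hypothetical)."""
    name: str
    steps: list[str]


@dataclass
class Assessment:
    completed: int = 0  # number of expected steps performed in order
    errors: list[tuple[int, str, str]] = field(default_factory=list)  # (action index, expected, performed)

    def score(self, total_steps: int) -> float:
        """Illustrative metric: completion ratio minus 0.1 per error, floored at 0."""
        return max(0.0, self.completed / total_steps - 0.1 * len(self.errors))


def assess(sequence: TaskSequence, performed: list[str]) -> Assessment:
    """Replay the trainee's logged actions against the expected step order."""
    result = Assessment()
    for i, action in enumerate(performed):
        if result.completed == len(sequence.steps):
            break  # sequence already finished; later actions are ignored
        expected = sequence.steps[result.completed]
        if action == expected:
            result.completed += 1
        else:
            result.errors.append((i, expected, action))
    return result


startup = TaskSequence("spindle start-up", ["close_door", "clamp_part", "start_spindle"])
log = ["clamp_part", "close_door", "clamp_part", "start_spindle"]
report = assess(startup, log)
print(report.completed, report.errors, report.score(len(startup.steps)))
```

In this log the trainee clamps the part before closing the door: the first action is recorded as an error, but the sequence is still completed afterwards, so repetition of the same sequence can show whether such errors disappear.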