AVATAR XR Development Workflows

Workflow description

This chapter provides a structured process for developing an XR environment. It is mainly based on a description of the development process through workflows formalized with an IDEF-X methodology. Development is thus viewed as a set of activities. Every activity is described by its inputs and outputs, the controls that manage the activity, and the resources expected for the activity (models, software, hardware, etc.). As in any IDEF-X functional description, an activity can be decomposed into sub-activities, which are presented in the same format.
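As an illustration of this activity format, the following minimal Python sketch represents an activity with its inputs, outputs, controls, resources and sub-activities. The class name `Activity`, the `walk` helper, the root label "A0 - XR development" and all input, output, control and resource values are assumptions made for this example; they are not taken from the AVATAR workflow diagrams.

```python
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Activity:
    """One activity box: a name plus its four kinds of arrows."""
    name: str
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    controls: list[str] = field(default_factory=list)
    resources: list[str] = field(default_factory=list)
    sub_activities: list["Activity"] = field(default_factory=list)

    def walk(self, depth: int = 0) -> Iterator[tuple[int, "Activity"]]:
        """Yield (depth, activity) pairs, parents before children."""
        yield depth, self
        for child in self.sub_activities:
            yield from child.walk(depth + 1)


# Illustrative placeholder values only (hypothetical, not from the source).
scene = Activity(
    name="A1 - Scene Creation",
    inputs=["CAD model"],
    outputs=["3D scene"],
    controls=["use-case requirements"],
    resources=["3D modeling software"],
)
simulation = Activity(
    name="A2 - Simulation",
    inputs=["3D scene"],
    outputs=["animated scene"],
)
# Hypothetical root activity grouping the top-level activities.
root = Activity(name="A0 - XR development", sub_activities=[scene, simulation])

# Print the decomposition as an indented outline.
for depth, activity in root.walk():
    print("  " * depth + activity.name)
```

Because sub-activities reuse the same class, the decomposition can be nested to any depth while keeping the same description format at every level.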

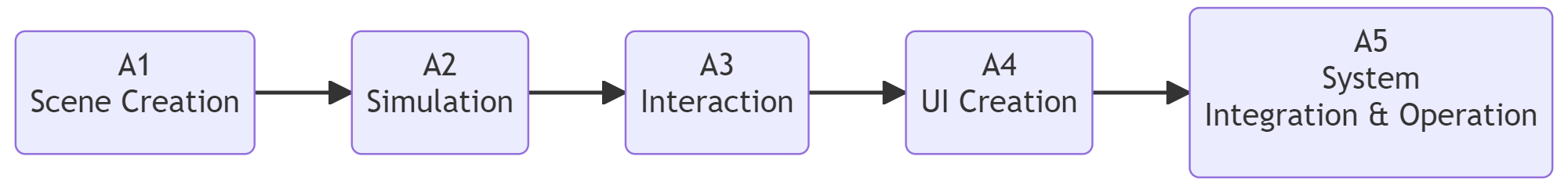

At the top level, the XR development process is decomposed into five main activities:

- XR requires the construction of a virtual scene, by defining 3D objects that will be visualized in the virtual, augmented or mixed reality environment. These objects have no intrinsic behavior and would remain static in the XR environment if no further development were carried out.

- Enabling simulation of object behavior is a first method to provide a dynamic scene, and the variety of simulations grows with the number of physical behaviors we expect to simulate in the XR environment.

- The objects are not alone in the XR environment and must react to human behavior. This is the matter of interaction, which requires a dedicated activity to enable interactive modes.

- Interactions must be organised so that they are easily understood and handled by the operator using the XR environment. This is a User Interface creation activity, which specifies, proposes and enables the correct interaction metaphors.

- Last but not least, the outputs of the previous activities must be integrated into an executable environment that can be operated successfully.

The general workflow structure is thus composed of five main activities, the building blocks described above.


General AVATAR workflow

Each of these five building blocks consists of a number of sub-activities. A first summary is provided below, and each activity is then described in full in its own section.

When proposing workflows, it is not easy to be fully generic. XR may be applied to many domains, including arts, healthcare, sports, gaming and many industrial applications. In our case, we expect to apply our workflows mostly to design and manufacturing applications. Even if we are convinced that the workflow will remain basically the same for every application domain, we must highlight that we mainly focused on specific types of use cases, which are illustrated in the next section.

The workflows were also tested with students every year throughout the AVATAR project (3 years). These student projects integrate the various activities and are not always reported sub-activity by sub-activity. Nevertheless, they are a good basis for illustration. The last three chapters of the book present these development examples and are often cited within the sub-activity descriptions to illustrate what we are developing.

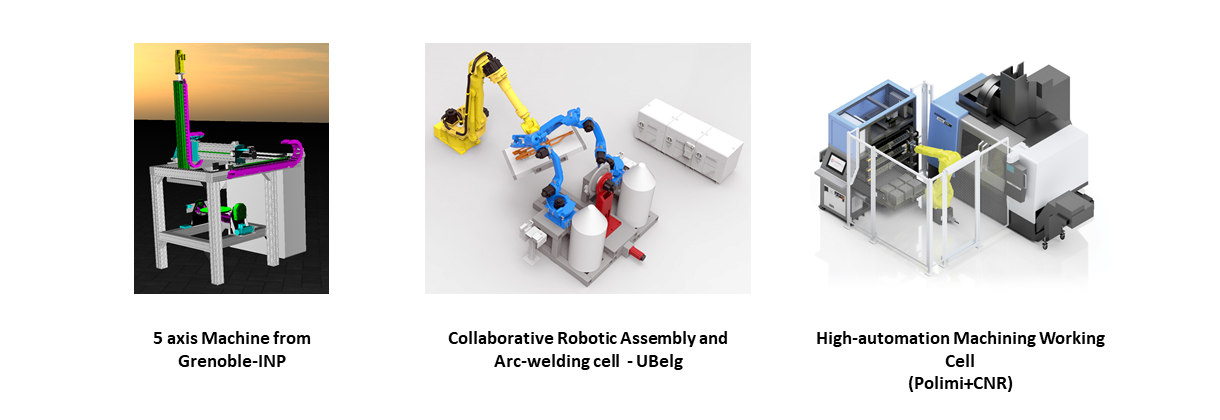

Assumption and focussed use cases

As previously explained, XR is applicable to many domains. Within AVATAR, we mainly expected to address design and manufacturing issues. For example, we expect to be able to manage manufacturing machines in Virtual Reality or Augmented Reality. That means that we must access 3D models of these machines and animate them with mechanical simulations covering kinematics but also control-command issues. Projects and tests with students were developed on the following machines: a 5-axis hybrid machine (combining milling and additive manufacturing), a collaborative robotic assembly and arc welding cell, and a complete working cell. The objective of this use case was to use XR technologies to create digital twins of automated machines for industrial production.

Manufacturing Environment for a Machining Center

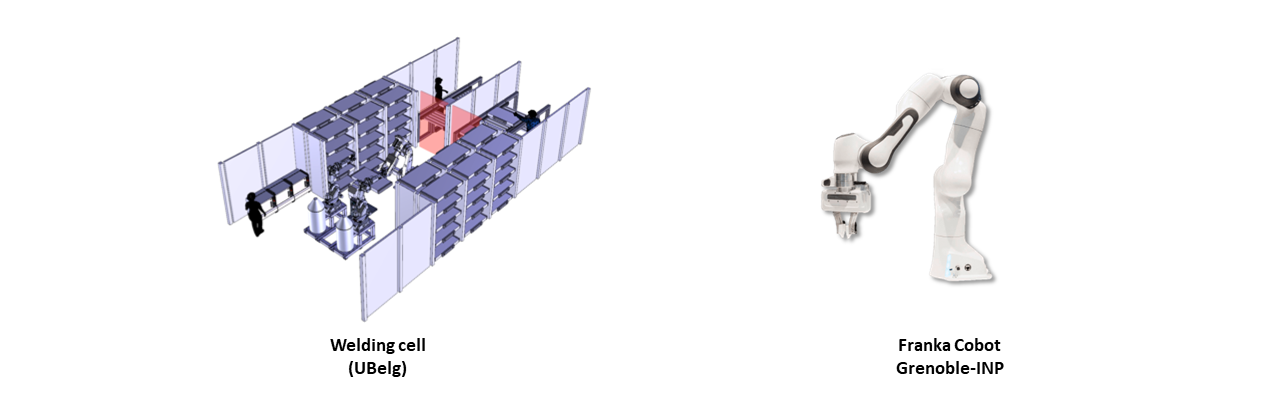

However, the digital twin of a machine is not useful if we do not introduce operators into the process. We therefore also took care that the proposed workflows could manage human activities in relation to machines, and we specifically used a complex welding cell or collaborative robots (cobots) to meet this expectation.

Hybrid Human - Robot Processes

Summaries of the top level activities

The targeted study problem is the creation of a Digital Twin, focused on two case studies: Hybrid Manufacturing Environment for a Machining Center and Hybrid Human - Robot Processes. To support the students, a general workflow was defined, coupled with a series of lectures and coaching from local and international teachers.

A1 - Scene Creation

Describes a series of steps to create a 3D geometric model of a manufacturing system suitable for implementation in an extended reality environment and also a 3D representation of the user (avatar). The workflow uses a hybrid approach, combining computer-aided design (CAD) tools and artistic 3D modeling tools to create a 3D representation of a Digital Twin (DT) to be integrated into a rendering software with VR and AR capabilities.

A2 - Simulation

Explains the multidisciplinary process of building a simulation model for a digital twin. It details the overall activities involved in this process, starting with the definition and analysis of the system behavior, then the development of this behavior so that it accurately reflects the responses of the physical system, the integration of the simulated data into a 3D structure for a virtual environment, and finally the calibration and tuning of the model to closely align it with the real-world system.

A3 - Interaction

Describes the essential activities for implementing interactions in an XR environment. The process involves defining interaction metaphors that reflect how users are supposed to interact with the environment, selecting interaction modes based on user inputs and system outputs, choosing the XR device that best suits the user’s needs, and characterizing assets as interactive or non-interactive. Finally, behaviors are created on an XR development platform.

A4 - UI Creation

Describes the crucial steps involved in the development of a user interface in the context of human-machine interaction. The process begins with the sketching of a storyboard to expose the initial vision of the interface behavior. Then, the construction of the scene is established, along with the potential objects (actors) and the actions that can be performed on these actors. Three fundamental actions are detailed: selection (choosing an actor), navigation (moving within the scene) and manipulation (acting on an actor). The technical procedures of selection, navigation and manipulation depend on the chosen device and the correspondence of its sensors/actuators with these actions.
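The correspondence between a device's inputs and the three fundamental actions can be sketched as a simple lookup table. This is a minimal illustration only: the device names, the event names and the helper functions `resolve_action` and `missing_actions` are hypothetical and do not come from any specific XR platform or from the AVATAR workflows.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    SELECTION = "selection"        # choosing an actor
    NAVIGATION = "navigation"      # moving within the scene
    MANIPULATION = "manipulation"  # acting on an actor


# Hypothetical device profiles: which input event triggers which action.
DEVICE_BINDINGS: dict[str, dict[str, Action]] = {
    "vr_controller": {
        "trigger_press": Action.SELECTION,
        "thumbstick_move": Action.NAVIGATION,
        "grip_hold": Action.MANIPULATION,
    },
    "ar_tablet": {
        "tap": Action.SELECTION,
        "drag": Action.MANIPULATION,
    },
}


def resolve_action(device: str, event: str) -> Optional[Action]:
    """Return the UI action bound to an input event, or None if unbound."""
    return DEVICE_BINDINGS.get(device, {}).get(event)


def missing_actions(device: str) -> set[Action]:
    """Return the fundamental actions that no input of the device triggers."""
    bound = set(DEVICE_BINDINGS.get(device, {}).values())
    return set(Action) - bound
```

A check such as `missing_actions("ar_tablet")` makes explicit which of the three actions still lacks a binding on a candidate device, which is one way to compare devices during UI design.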

A5 - System Integration & Operation

Describes a series of activities designed to ensure that students can fully integrate and operate the developed Manufacturing Digital Twin System. This is intended to facilitate the seamless integration of the hardware and software components of all building blocks (A1 through A4), along with a physical manufacturing system such as a CNC machine tool or robot, culminating in a fully deployable and operational Manufacturing Digital Twin System with a built-in Extended Reality Human-Machine Interface function.

A6 - XR-Based Learning

XR technologies can be used to provide an innovative environment for learning assessment. VR and AR can provide a powerful delivery mechanism for learning but, at the same time, can also be a more accurate tool for learning assessment and self-assessment. This class of workflow will address how to implement learning approaches and tools based on VR/AR environments.