A3 - Interaction

The INTERACTION workflow defines the main activities that must be completed to integrate interactions into an XR environment.

Interactions determine the appearance and behavior of the XR environment, as well as the way the user interacts with and experiences it. A good interaction lets the user act comfortably and effectively in the XR environment to perform the defined tasks. A bad interaction reduces the user's effectiveness, either by presenting a confusing and disorienting XR environment or by making interactions in the environment difficult or impossible to perform.

Workflow Structure

| CONTROLS: Expected perception - Interaction requirements - Device capacity - Expected behavior | ||

| INPUTS: XR environment |  | OUTPUTS: XR Environment with Interactions and behaviors |

| RESOURCES: XR environment - Activity description - Available device - Expected behavior - APIs, Rendering system, Scripts |

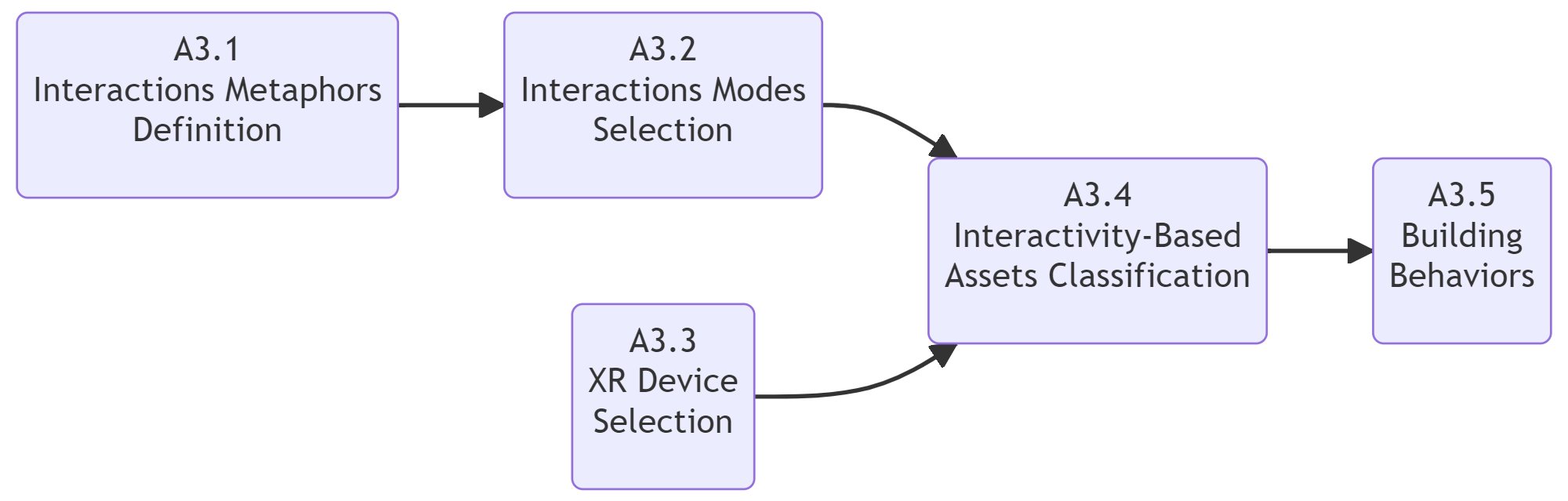

Interaction workflow

Workflow Building-Blocks

| Activities | Overview |

|---|---|

| A3.1 Interactions Metaphors Definition | • Description: A metaphor is the way in which the user is expected to relate to the XR environment. Based on the use case and the expected perception, define the interaction metaphors (navigation, selection, manipulation). To create an interaction metaphor: 1) Define the activities that the user can and cannot do in the XR environment 2) Decompose each activity into elementary tasks 3) Create the interaction that corresponds to each task • Input: XR environment • Output: Interactions metaphors based on a particular use case • Control: Expected perception • Resource: XR environment |

| A3.2 Interactions Modes Selection | • Description: Select the inputs and outputs for each interaction. Inputs come from the user (hand tracking, eye tracking, controllers); outputs (sounds, haptics, colors) come from the virtual environment in response to an interaction. Use inputs and outputs to reinforce the feeling of presence and to improve task performance. • Input: Interactions metaphors • Output: Interactions modes • Control: Interaction requirements • Resource: Interactions and description of activities |

| A3.3 XR Device Selection | • Description: Select the XR device that matches both the metaphors and the interaction modes by listing its degrees of freedom (DoF) and describing which DoF will be mapped to selection, navigation, or manipulation. • Input: XR Devices Output A1 and A2 • Output: Mapping between device and metaphors • Control: Device capacity with respect to metaphor parameters • Resource: Available device |

| A3.4 Interactivity-Based Assets Classification | • Description: A large number of interactive assets can result in a poor frame rate, leading to an undesired experience or even cybersickness. It is therefore important to identify and limit the number of interactive assets available to the user, weighing the value each interaction adds to the expected perception. • Input: XR Environment and XR Device • Output: List of interactive and non-interactive assets • Control: Frame rate • Resource: Available device and XR Environment |

| A3.5 Building Behaviors | • Description: Build the behaviors that link user interactions to the XR environment. Each behavior is based on a trigger event that calls an assigned, parameterized action. Behaviors can be generated directly by the rendering system, by external APIs, or by scripts. • Input: XR Environment and XR Interactions • Output: XR Environment with Interactions behaviors • Control: Frame rate and Expected behavior • Resource: APIs, Rendering system, Scripts |
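
As an illustration of activity A3.5, the trigger-event-to-action pattern can be sketched in a few lines of script. This is a minimal sketch under stated assumptions, not the API of any particular rendering system: the names `Behavior`, `BehaviorRegistry`, `bind`, `fire`, `highlight`, and `play_sound`, the `"select"` trigger, and the `valve_handle` asset are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical action signature: takes the target asset name plus parameters,
# returns a description of the output produced (visual, audio, haptic, ...).
Action = Callable[..., str]


@dataclass
class Behavior:
    """One behavior: a trigger event bound to a parameterized action."""
    trigger: str
    action: Action
    params: Dict[str, Any] = field(default_factory=dict)


class BehaviorRegistry:
    """Stores behaviors per trigger and runs them when the trigger fires."""

    def __init__(self) -> None:
        self._behaviors: Dict[str, List[Behavior]] = {}

    def bind(self, trigger: str, action: Action, **params: Any) -> None:
        # Assign an action and its parameters to a trigger event.
        self._behaviors.setdefault(trigger, []).append(
            Behavior(trigger, action, params)
        )

    def fire(self, trigger: str, target: str) -> List[str]:
        # Call every action bound to this trigger, in binding order.
        return [
            b.action(target, **b.params)
            for b in self._behaviors.get(trigger, [])
        ]


# Hypothetical output actions (a color change and a sound), standing in for
# calls that a real rendering system, API, or script would perform.
def highlight(target: str, color: str = "yellow") -> str:
    return f"{target} highlighted in {color}"


def play_sound(target: str, clip: str = "click") -> str:
    return f"sound '{clip}' played for {target}"


registry = BehaviorRegistry()
registry.bind("select", highlight, color="green")
registry.bind("select", play_sound, clip="confirm")

results = registry.fire("select", "valve_handle")
for line in results:
    print(line)
```

Binding several actions to one trigger mirrors the idea from A3.2 that a single user input can produce multiple outputs (here a color and a sound). A trigger with no bound behavior simply returns an empty list, which keeps non-interactive assets cheap to handle.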