A3.2 - Interaction Modes Selection

To create immersive VR/AR experiences, it is essential to select the interaction modes. Interaction modes are defined in terms of the inputs and outputs that facilitate the user’s interaction with the virtual content.

- Inputs

For VR, head tracking captures the position and rotation of the user’s head. In addition, controllers represent the user’s hands and provide other types of input such as buttons, triggers, and touch.

For AR on head-mounted displays, hand tracking is widely used because it is intuitive and resembles real-world interaction. Hand gestures, such as pinch, swipe, and tap, allow users to control various features and functions.
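As an illustration of how a hand gesture can become an input, the sketch below detects a pinch from two tracked fingertip positions. It assumes the hand-tracking system exposes thumb-tip and index-tip positions in metres; the function name `is_pinching` and the 2 cm threshold are hypothetical and are not taken from any particular SDK.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]

# Hypothetical threshold in metres; tune it for the target device.
PINCH_THRESHOLD_M = 0.02


def is_pinching(thumb_tip: Point3, index_tip: Point3,
                threshold_m: float = PINCH_THRESHOLD_M) -> bool:
    """Return True when thumb and index fingertips are close enough to count as a pinch."""
    return math.dist(thumb_tip, index_tip) <= threshold_m
```

In practice, a second and slightly larger release threshold is often added so that the gesture does not flicker when the fingertips hover around the limit.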

- Outputs

For VR, the main feedback channels are visual and tactile. Visual rendering presents the virtual environment to the user, including colors, textures, and a virtual representation of their hands. Haptic feedback gives the user a tactile response each time they interact with a virtual object.

For AR, hand tracking comes without haptic feedback or precise depth perception, so it can be difficult to know how far the hand is from an object or whether it is touching it. It is therefore important to provide sufficient visual cues to communicate the state of the object.

- Near interactions:

Hover (Far)  Highlighting based on the proximity of the hand. | Hover (Near)  Highlight size changes based on the distance to the hand. |

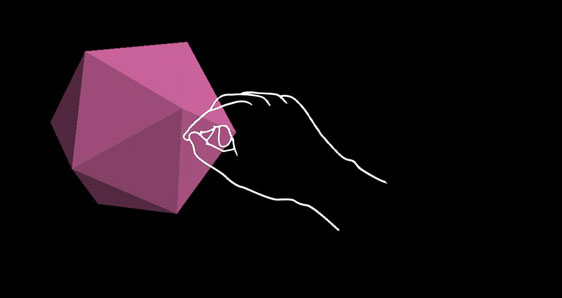

Touch / press  Visual plus audio feedback. | Grasp  Visual plus audio feedback. |
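The near-interaction cues can be sketched as a function of the distance between the hand and the object. This is a minimal sketch, not a reference implementation: the range constants, the base highlight size, and the `HoverCue` type are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical ranges in metres; tune them for the device and object size.
FAR_RANGE_M = 0.30
NEAR_RANGE_M = 0.10
BASE_HIGHLIGHT = 0.25  # assumed highlight size used throughout the far hover range


@dataclass(frozen=True)
class HoverCue:
    state: str              # "idle", "hover_far", "hover_near" or "touch"
    highlight_scale: float  # 0.0 = no highlight, 1.0 = full-size highlight
    play_audio: bool        # audio accompanies the visual cue on touch


def hover_cue(distance_m: float) -> HoverCue:
    """Map the hand-to-object distance to a feedback cue."""
    if distance_m <= 0.0:
        return HoverCue("touch", 1.0, True)
    if distance_m <= NEAR_RANGE_M:
        # Near hover: the highlight grows as the hand approaches the object.
        closeness = 1.0 - distance_m / NEAR_RANGE_M
        scale = BASE_HIGHLIGHT + (1.0 - BASE_HIGHLIGHT) * closeness
        return HoverCue("hover_near", scale, False)
    if distance_m <= FAR_RANGE_M:
        # Far hover: the highlight is switched on at a fixed base size.
        return HoverCue("hover_far", BASE_HIGHLIGHT, False)
    return HoverCue("idle", 0.0, False)
```

The highlight size is continuous at the boundary between far and near hover, which avoids a visible jump as the hand moves closer.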

- Far interactions:

For any object that the user can interact with through gaze, hand ray, or motion controller’s ray, we recommend providing a distinct visual cue for each of these three input states:

| State | Image | Description |

|---|---|---|

| Default State |  | Default idle state of the object. The cursor isn’t on the object. Hand isn’t detected. |

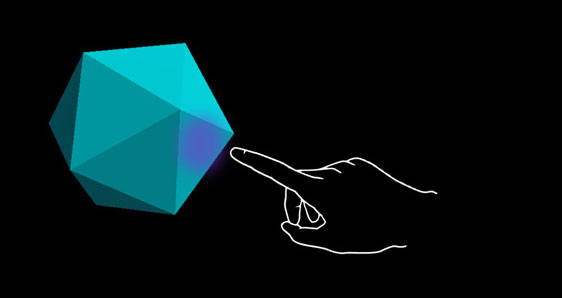

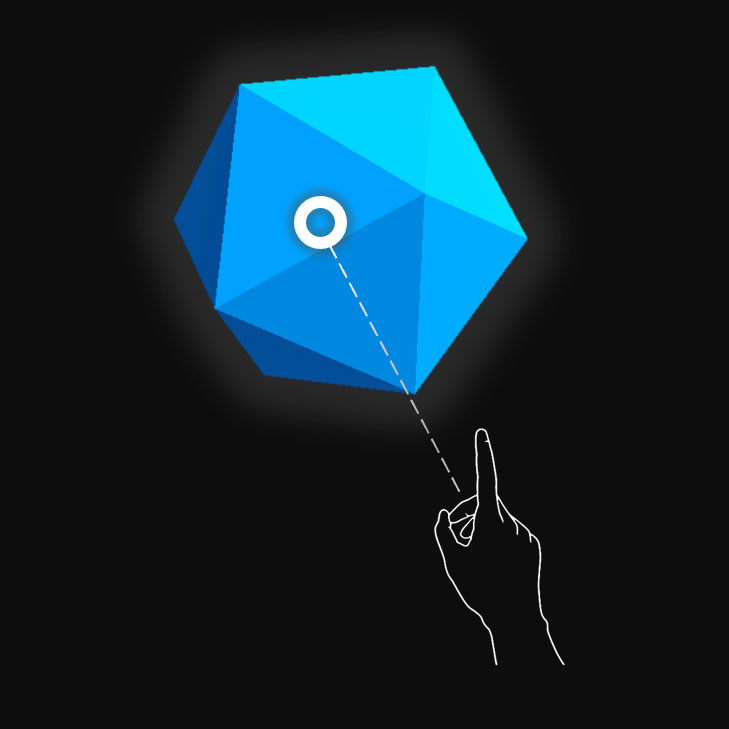

| Targeted State |  | When the object is targeted with gaze cursor, finger proximity or motion controller’s pointer. The cursor is on the object. |

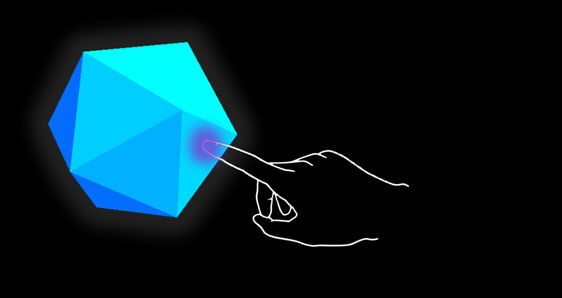

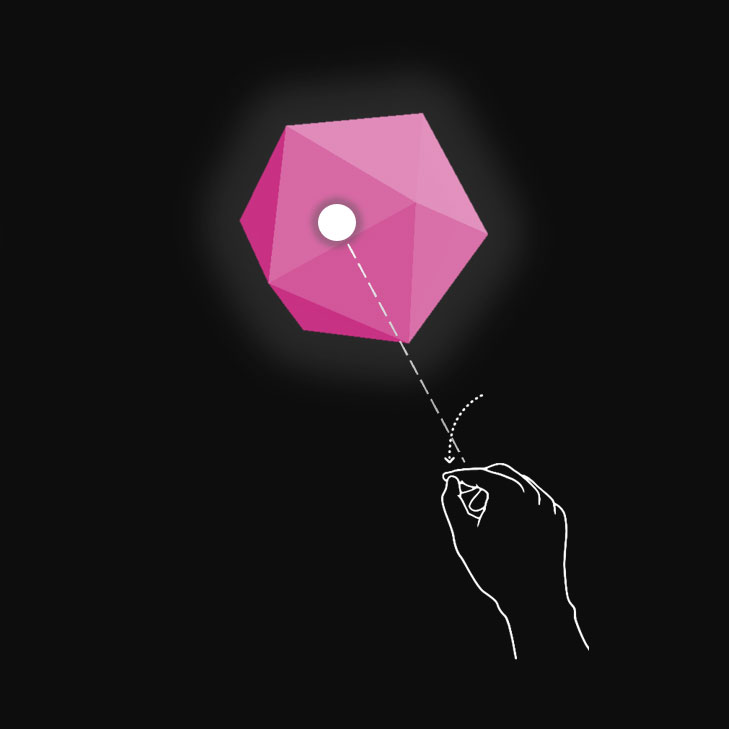

| Pressed State |  | When the object is pressed with an air tap gesture, finger press or motion controller’s select button. The cursor is on the object. Hand is detected, air tapped. |
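The three states can be sketched as a small state resolver shared by gaze, hand ray, and motion controller input. The enum, the function name, and the cue names in `VISUAL_CUES` are hypothetical and only show how one visual cue per state might be selected.

```python
from enum import Enum


class InteractionState(Enum):
    DEFAULT = "default"
    TARGETED = "targeted"
    PRESSED = "pressed"


# Hypothetical cue names; the actual visuals depend on the application's design.
VISUAL_CUES = {
    InteractionState.DEFAULT: "no_highlight",
    InteractionState.TARGETED: "outline_highlight",
    InteractionState.PRESSED: "filled_highlight",
}


def resolve_state(cursor_on_object: bool, select_active: bool) -> InteractionState:
    """Derive the object state from the pointer position and the select action.

    `select_active` stands for an air tap, a finger press, or the controller's
    select button, whichever input source is currently in use.
    """
    if not cursor_on_object:
        return InteractionState.DEFAULT
    if select_active:
        return InteractionState.PRESSED
    return InteractionState.TARGETED
```

Because the resolver only depends on whether the cursor is on the object and whether a select action is active, the same logic applies to all three input sources.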

Input

Detailed guidelines on interaction modes in XR, emphasizing user inputs such as head tracking and hand gestures. Detailed description of the various output (feedback) mechanisms and specific near and far interaction techniques.

Output

An intuitive XR application that delivers immersive experiences by leveraging rich user-centric interaction modes to facilitate a variety of interactions.

Control

- Input-based interactions

- Incorporate feedback mechanisms, primarily visual and, where possible, tactile

- Ensure a smooth transition between interactions

Resources

- XR development environment

- Documentation for the design and creation of interactions