L2 - Human-Robot Collaborative Assembling Processes

The goal of this workflow is to show how a manufacturing operation (e.g. an assembly) can be simulated in a VR environment when machines (e.g. a cobot) and humans operate together in a hybrid setting. In particular, this task adds a humanoid avatar to a 3D virtual scene showing the manufacturing process of interest, in which a cobot and the virtual human avatar collaborate. A trainee can experience this virtual scene through a head-mounted display and thereby acquire the skills required to perform the same set of operations. The sequence of activities required to implement such a training tool is obtained by integrating the workflows “A1 - Scene Creation” and “A2 - Simulation” with the one described here.

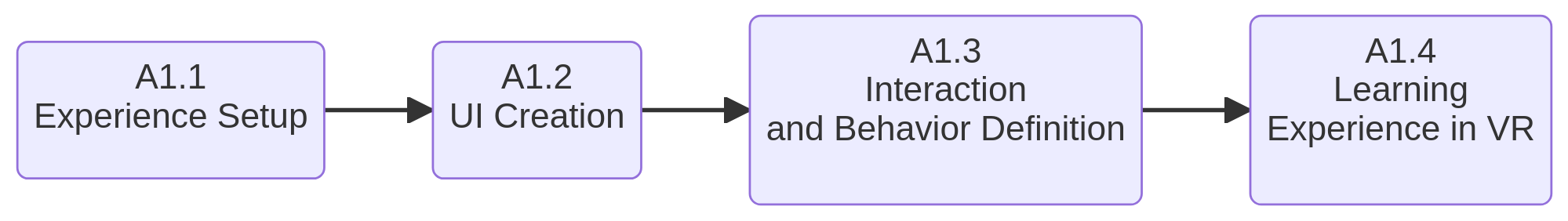

Workflow Structure


Human Avatar Integration Workflow

Workflow Building Blocks

| Activities | Overview |
|---|---|
| A1.1 Actions Definition | • Description: the goal of this activity is to define one or more complex manufacturing sequences of interest that a human performs in cooperation with a cobot. Sequences are selected according to their relevance for training. Each chosen sequence is split into a set of actions, each defined as a discrete part of the human-performed manufacturing operation. Actions should be as short as possible but still semantically recognizable, e.g. tightening a bolt, screwing, pulling a lever. • Input: a set of relevant, complex, human-performed manufacturing operations. • Output: a document that describes, for each selected operation, the sequence of actions into which it can be split. This document must consider how to split complex movements into more atomic ones, so that each of them can be reused for different tasks. • Control: • Resource: knowledge of the manufacturing operations performed by human professionals in the context of interest. |
| A1.2 Animations Capture | • Description: during this activity, a set of basic movements, or actions, is captured from a human actor. Each action is chosen so that its recording can be combined with other recordings to create a sequence representing a complex human-performed manufacturing operation. • Input: a document containing the description of each action to be recorded. • Output: a collection of motion-captured animation files. • Control: • Resource: motion capture devices; motion refinement software (e.g. Autodesk MotionBuilder). |
| A1.3 Animations DB | • Description: this activity aims to create a database (DB) containing all the captured animation files. The DB entries must be tagged so that the actions required to compose a sequence can be accessed easily and quickly. • Input: the capture files produced in activity 1.2. • Output: the tagged database of animation files. • Control: • Resource: depends on the chosen software solution, but should include a database engine or library. |
| A1.4 Action Composition | • Description: during this activity, the set of actions defined in activity 1.1 is translated into a sequence of animation files that together can animate a virtual 3D human character, making it move as if it were realistically performing the manufacturing process of interest. • Input: the action animation entries of the database built in activity 1.3. • Output: a set of animation file sequences. Each sequence is assembled from captured actions that together represent the human-performed manufacturing operation of interest. • Control: • Resource: software able to retrieve DB entries according to search criteria. |
| A1.5 Trainee’s Attributes | • Description: to make the VR Training Experience as realistic as possible for the trainee, his or her physical attributes must be collected. The user’s biometric data are captured by means of off-the-shelf software and sensors. The amount of data collected should be consistent with the level of realism expected from the final VR Training Experience. • Input: biometric data from sensors. • Output: the trainee’s digitized biometric attributes. • Control: • Resource: software able to collect biometric data using off-the-shelf sensors, e.g. the Occipital Structure Sensor. |
| A1.6 Avatar Configuration | • Description: the virtual 3D human character that the trainee will use to interact with the virtual world during the VR Training Experience is configured to replicate the trainee’s physical attributes. • Input: the trainee’s biometric attributes and a rigged 3D human character. • Output: the trainee’s humanoid avatar. • Control: • Resource: character animation software able to modify the 3D character’s rig as well as its other selected physical attributes. |
| A1.7 Scene Definition | • Description: during this activity, the virtual scene replicating the manufacturing facility, together with all the industrial machinery and tools, is assembled according to the training objectives of interest. 3D models must either be taken from those freely available on the web or be custom-made starting from CAD models. This activity also includes importing the configured 3D avatar and the set of animation files that animate it, as well as the cobot model with the definition of its operations. • Input: 3D models of the facility and tools to be used; the configured avatar and the animation sequences of the human-performed manufacturing operations of interest. • Output: the assembled 3D virtual environment. • Control: • Resource: a software platform able to act both as a graphics engine and as a game engine, such as Unity; the 3D models; the software that operates the virtual cobot. |
| A1.8 VR Training Experience | • Description: during this activity, the software logic that synchronises avatar and cobot movements is implemented. The logic depends on the user experience offered to the trainee. Two training modes are possible: in the first, the trainee observes his or her avatar performing the correct sequence of actions in collaboration with the cobot; in the second, the trainee takes control of the avatar and learns the manufacturing sequence by performing it. • Input: the scene defined in activity 1.7. • Output: the ready-to-use VR Training Experience. • Control: an experienced professional able to evaluate the trainee’s learning curve. • Resource: programming skills for the platform selected in activity 1.7, needed to implement the VR Training Experience’s logic. |
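
As an illustration of activity A1.3, the sketch below shows one possible way to tag captured clips so they can be retrieved by action, hand, or any other criterion. It is a minimal sketch only: it assumes SQLite (through Python's standard `sqlite3` module) as the database engine, and the schema, the function names `create_animation_db`, `add_clip` and `find_clips`, and the clip paths and tags are all hypothetical.

```python
from __future__ import annotations

import sqlite3


def create_animation_db(conn: sqlite3.Connection) -> None:
    """Create a hypothetical minimal schema: one row per clip plus a tag table."""
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS clips (
            id     INTEGER PRIMARY KEY,
            action TEXT NOT NULL,       -- atomic action name, as defined in A1.1
            path   TEXT NOT NULL UNIQUE -- motion-capture file produced in A1.2
        );
        CREATE TABLE IF NOT EXISTS clip_tags (
            clip_id INTEGER NOT NULL REFERENCES clips(id),
            tag     TEXT NOT NULL,
            PRIMARY KEY (clip_id, tag)
        );
        """
    )


def add_clip(conn: sqlite3.Connection, action: str, path: str, tags: list[str]) -> int:
    """Insert one clip and its (deduplicated) tags; return the new clip id."""
    cur = conn.execute("INSERT INTO clips (action, path) VALUES (?, ?)", (action, path))
    clip_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO clip_tags (clip_id, tag) VALUES (?, ?)",
        [(clip_id, tag) for tag in set(tags)],
    )
    return clip_id


def find_clips(conn: sqlite3.Connection, *tags: str) -> list[str]:
    """Return, sorted by path, the clips that carry every one of the given tags."""
    if not tags:
        raise ValueError("at least one tag is required")
    unique = sorted(set(tags))
    placeholders = ", ".join("?" for _ in unique)  # only '?' markers; values are bound
    rows = conn.execute(
        f"""
        SELECT c.path
        FROM clips AS c
        JOIN clip_tags AS t ON t.clip_id = c.id
        WHERE t.tag IN ({placeholders})
        GROUP BY c.id
        HAVING COUNT(DISTINCT t.tag) = ?
        ORDER BY c.path
        """,
        (*unique, len(unique)),
    ).fetchall()
    return [path for (path,) in rows]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_animation_db(conn)
    add_clip(conn, "tighten_bolt", "clips/tighten_bolt_01.fbx", ["tighten_bolt", "right_hand"])
    add_clip(conn, "tighten_bolt", "clips/tighten_bolt_02.fbx", ["tighten_bolt", "left_hand"])
    add_clip(conn, "pull_lever", "clips/pull_lever_01.fbx", ["pull_lever", "right_hand"])
    print(find_clips(conn, "tighten_bolt", "right_hand"))  # ['clips/tighten_bolt_01.fbx']
```

A separate tag table is used here, rather than a fixed set of columns, so that new tags (for instance the tool being held) could be added without changing the schema.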
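
Activities A1.1 and A1.4 can be pictured together with a small data model: each operation is an ordered sequence of atomic action names, and composition looks each action up in a catalogue of captured clips. The sketch below assumes one clip per action and uses hypothetical names throughout (`Operation`, `compose`, `reuse_counts`, the action names and file paths); in a real setup the catalogue could be populated from the animations DB of activity A1.3.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Operation:
    """A human-performed operation (A1.1): an ordered sequence of atomic action names."""
    name: str
    actions: tuple[str, ...]


def compose(operation: Operation, catalogue: dict[str, str]) -> list[str]:
    """Translate an operation into the ordered clip files that animate it (A1.4).

    `catalogue` (hypothetical) maps each atomic action name to one captured clip.
    Raises KeyError naming every action that has not been captured yet.
    """
    missing = sorted({a for a in operation.actions if a not in catalogue})
    if missing:
        raise KeyError(f"{operation.name}: no clip captured for {missing}")
    return [catalogue[action] for action in operation.actions]


def reuse_counts(operations: list[Operation]) -> dict[str, int]:
    """Count how many operations use each atomic action (a rough measure of reuse)."""
    counts: dict[str, int] = {}
    for operation in operations:
        for action in set(operation.actions):
            counts[action] = counts.get(action, 0) + 1
    return counts


if __name__ == "__main__":
    catalogue = {
        "pick_part": "clips/pick_part.fbx",
        "place_part": "clips/place_part.fbx",
        "tighten_bolt": "clips/tighten_bolt.fbx",
    }
    fit_bracket = Operation("fit_bracket", ("pick_part", "place_part", "tighten_bolt"))
    fit_cover = Operation("fit_cover", ("pick_part", "place_part", "pull_lever"))
    print(compose(fit_bracket, catalogue))
    print(reuse_counts([fit_bracket, fit_cover]))
    try:
        compose(fit_cover, catalogue)  # "pull_lever" has no captured clip
    except KeyError as err:
        print("missing:", err)
```

Reporting every missing action at once would make gaps in the capture plan of A1.2 visible early, and `reuse_counts` gives a rough check of how well the decomposition chosen in A1.1 supports reuse across tasks.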
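
For activities A1.5 and A1.6, one simple way to adapt a generic rigged character to the trainee is to scale each body segment by the ratio between the trainee's measured length and the rig's reference length. The sketch below assumes segment lengths in metres, uses a hypothetical function `segment_scales` with hypothetical segment names and values, and is not tied to any specific character animation package or sensor. Segments that were not measured fall back to the overall height ratio, so collecting more measurements gives a closer fit, in line with matching the amount of data to the expected level of realism.

```python
from __future__ import annotations


def segment_scales(
    trainee_height: float,
    trainee_segments: dict[str, float],
    reference_height: float,
    reference_segments: dict[str, float],
) -> dict[str, float]:
    """Hypothetical per-segment scale factors that make a generic rig match the trainee.

    Each rig segment gets trainee_length / reference_length when the trainee's
    segment was measured; unmeasured segments use the uniform height ratio.
    """
    if trainee_height <= 0 or reference_height <= 0:
        raise ValueError("heights must be positive")
    uniform = trainee_height / reference_height
    scales: dict[str, float] = {}
    for segment, ref_length in reference_segments.items():
        if ref_length <= 0:
            raise ValueError(f"reference segment {segment!r} must have a positive length")
        measured = trainee_segments.get(segment)
        if measured is None:
            scales[segment] = uniform  # not measured: fall back to overall proportions
        elif measured <= 0:
            raise ValueError(f"measured segment {segment!r} must have a positive length")
        else:
            scales[segment] = measured / ref_length
    return scales


if __name__ == "__main__":
    # Hypothetical reference rig (metres) and a trainee with only height and forearm measured.
    rig_height = 1.80
    rig_segments = {"upper_arm": 0.30, "forearm": 0.25, "thigh": 0.45, "shin": 0.43}
    scales = segment_scales(1.98, {"forearm": 0.30}, rig_height, rig_segments)
    print({name: round(factor, 3) for name, factor in scales.items()})
```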
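
The synchronisation logic of activity A1.8 can be sketched, independently of the game engine, as a strictly ordered list of human and cobot steps covering both training modes. This is a minimal, turn-based sketch under stated assumptions: the names `Mode`, `Step` and `run_session`, the step names, and the retry-on-error policy are hypothetical, and an engine-based implementation (e.g. in Unity) would react to animation and cobot completion events rather than loop over a list.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class Mode(Enum):
    DEMONSTRATION = "demonstration"  # the avatar replays the recorded human actions
    HANDS_ON = "hands_on"            # the trainee drives the avatar


@dataclass(frozen=True)
class Step:
    actor: str   # "human" or "cobot"
    action: str  # atomic action name, e.g. as defined in A1.1


def run_session(
    steps: Iterable[Step], mode: Mode, trainee_inputs: Iterable[str] = ()
) -> tuple[list[str], list[tuple[str, str]]]:
    """Play a shared human/cobot sequence strictly in order; return (log, errors).

    No step starts before the previous one has finished, which keeps avatar and
    cobot in sync. Cobot steps, and human steps in demonstration mode, play
    automatically. In hands-on mode each human step waits for the trainee: a
    wrong action is recorded as (expected, attempted) and the step is retried
    with the next input. If the inputs run out, the session pauses on that step.
    """
    inputs = iter(trainee_inputs)
    log: list[str] = []
    errors: list[tuple[str, str]] = []
    for step in steps:
        if step.actor == "human" and mode is Mode.HANDS_ON:
            for attempt in inputs:
                if attempt == step.action:
                    break
                errors.append((step.action, attempt))
            else:  # inputs exhausted before the expected action was performed
                log.append(f"waiting for trainee: {step.action}")
                return log, errors
        log.append(f"{step.actor}: {step.action}")
    return log, errors


if __name__ == "__main__":
    sequence = [
        Step("cobot", "hold_frame"),
        Step("human", "insert_bolt"),
        Step("human", "tighten_bolt"),
        Step("cobot", "release_frame"),
    ]
    print(run_session(sequence, Mode.DEMONSTRATION))
    print(run_session(sequence, Mode.HANDS_ON, ["tighten_bolt", "insert_bolt", "tighten_bolt"]))
```

In demonstration mode the trainee only watches the sequence play out; in hands-on mode the returned `errors` list is one possible input for the experienced professional who evaluates the trainee's learning curve.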