A5.3 - Digital Twin Integration

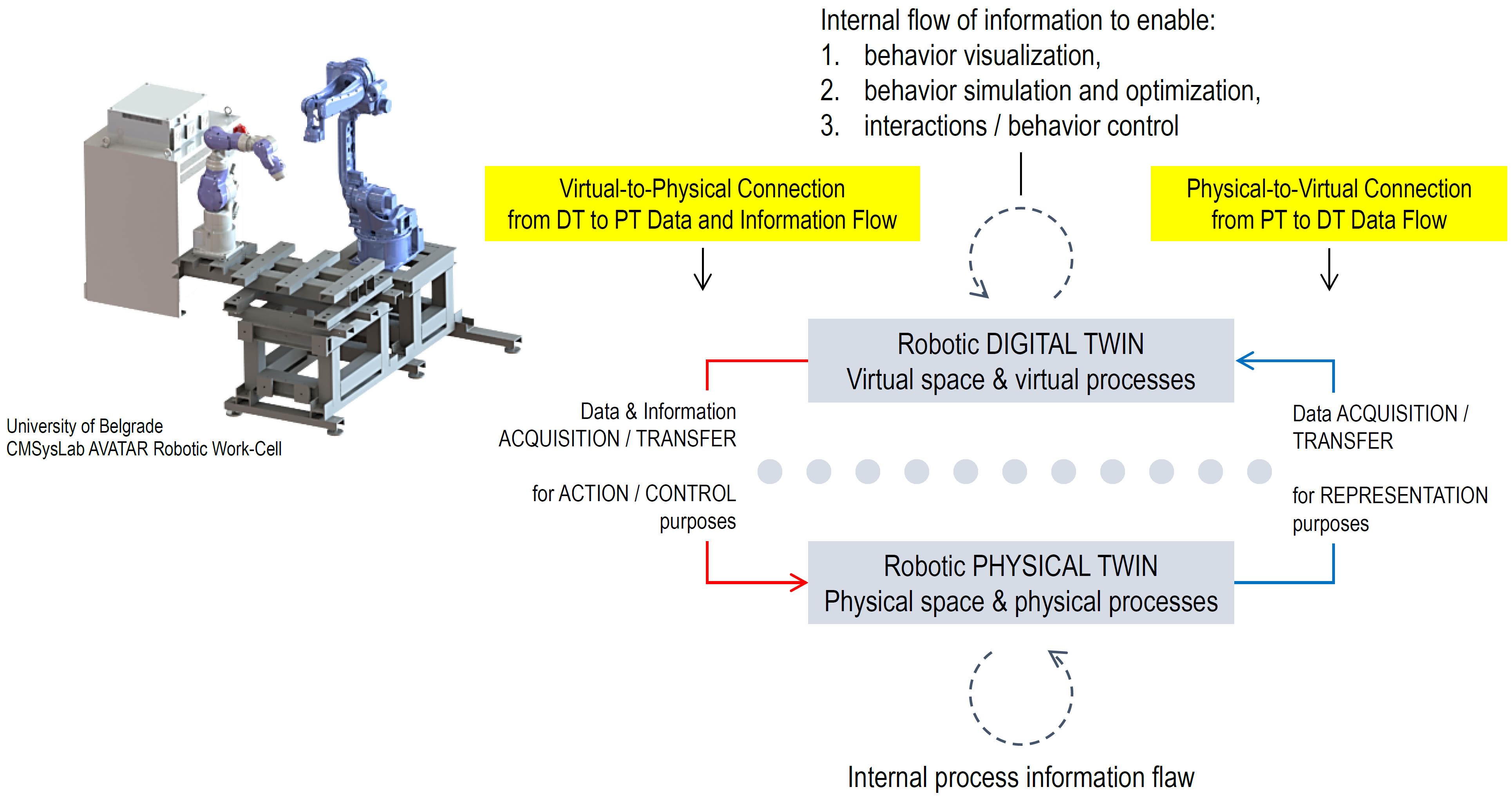

The Manufacturing Digital Twin concept revolves around the bidirectional exchange of data and information between the physical and virtual spaces, fostering real-time interaction between these two worlds. Real-time data streaming from the physical space generates the ‘Digital Shadow,’ ensuring synchronization of the virtual replica with its physical counterpart. Conversely, information flow from the virtual to the physical space, although not always in real-time, enables the physical execution of simulated behaviors originating in the virtual space.

Of course, within both the physical and virtual spaces, there are internal processes where data and information flow autonomously, without the necessity of exchanging information between the two worlds. These internal processes are responsible for the individual functioning and behavior of each space independently. The internal data flows in the physical space pertain to the real-time interactions, actions, and responses of the physical manufacturing entities, while the internal data flows in the virtual space govern the behaviors, simulations, and interactions of the digital replicas.

The bidirectional data exchange between the physical and virtual spaces occurs when there is a need to synchronize and align the states and behaviors of the digital replicas with their physical counterparts, or when the simulation results from the virtual space are to be executed in the physical space. This exchange ensures that the virtual and physical worlds remain connected, facilitating the efficient operation and optimization of the Manufacturing Digital Twin system.

For the use-case of Robotic Work-Cell Digital Twin (RWC DT), the aforementioned data and information flows are depicted in the following figure.

Robotic Work-Cell Digital Twin flow

Integration of the Manufacturing Digital Twin is strongly influenced by the manufacturing process and the associated manufacturing equipment. In the robotic use case, i.e., Robotic Work-Cell Digital Twin integration (RWC DT integration), the following workflow should be applied:

Digital Shadow integration - Integrating the Digital Shadow of a physical robot (or, more completely, of the whole RWC) into a Unity project involves capturing the robot’s movements and actions in real time and replicating them on a 3D representation of the robot within the Unity scene. A high-level workflow to achieve this integration is as follows:

A5.3.1.1 Robot Tracking and Data Capture:

This functionality can be achieved by using the encoders on the robot arm’s joints in conjunction with a Robot Open Architecture Control system (Robot OAC System). The Robot OAC provides a versatile, open control system for the physical robot, enabling real-time communication and control of various robot parameters, including joint angles, motion profiles, the Cartesian coordinates of the robot’s tool center point (TCP), joint torques, and more. For instance, the YASKAWA MotoPlus SDK can be used to develop such a Robot OAC system: it gives real-time access to the YASKAWA robot’s joint angles and related data, providing an interface between the physical robot and external applications. This entails coding a series of application routines in C and then loading them into the robot control system.

A5.3.1.2 Data Processing and Conversion:

Process the data captured from the robot to extract its position and motion data, then convert this data into a format that Unity can read and use to animate the robot’s digital replica (the Digital Shadow).
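A minimal sketch of this conversion step is shown below, written in Python for brevity; in the Unity project the same logic would live in a C# script. It assumes, hypothetically, that the Robot OAC reports each joint as an integer encoder pulse count, that the per-axis pulses-per-degree resolution and zero offsets are known from the controller parameters, and that some joints must be mirrored because Unity uses a left-handed, Y-up coordinate system while robot controllers are typically right-handed and Z-up. `AxisCalibration`, `pulses_to_unity_degrees`, and all numeric values are illustrative placeholders.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class AxisCalibration:
    pulses_per_degree: float  # encoder resolution of the axis (hypothetical value)
    zero_offset_pulses: int   # pulse count reported at the joint's zero position
    unity_sign: int           # +1 or -1: flips the rotation sense for the Unity model


def pulses_to_unity_degrees(pulses: Sequence[int],
                            calib: Sequence[AxisCalibration]) -> List[float]:
    """Convert raw joint pulse counts into joint angles (degrees) in the Unity convention."""
    if len(pulses) != len(calib):
        raise ValueError("one calibration entry is required per axis")
    return [c.unity_sign * (p - c.zero_offset_pulses) / c.pulses_per_degree
            for p, c in zip(pulses, calib)]


# Hypothetical three-axis calibration, for illustration only
CALIB = [
    AxisCalibration(pulses_per_degree=1000.0, zero_offset_pulses=0, unity_sign=1),
    AxisCalibration(pulses_per_degree=1000.0, zero_offset_pulses=500, unity_sign=-1),
    AxisCalibration(pulses_per_degree=800.0, zero_offset_pulses=0, unity_sign=1),
]
print(pulses_to_unity_degrees([90000, 45500, -8000], CALIB))  # [90.0, -45.0, -10.0]
```

Keeping the calibration in a separate table means the conversion code does not change when the robot model or the Unity rig changes; only the table does.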

A5.3.1.3 Real-time Data Transmission:

Network the Robot OAC and the Unity project via UDP by integrating a communication channel between the robot tracking system embedded in the Robot OAC and the Unity project. This allows the real-time configuration of the physical robot to be transmitted to Unity continuously.
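The channel needs an agreed datagram layout on both ends. The Python sketch below assumes a hypothetical little-endian packet made of a 32-bit sequence number, a 64-bit timestamp in seconds, and six 32-bit joint angles in degrees, and sends one packet over the loopback interface. The layout and the function names are illustrative assumptions, not a format defined by the Robot OAC or by Unity; on the Unity side, the same layout would be decoded in C#.

```python
import socket
import struct
from typing import Sequence, Tuple

# Hypothetical packet layout (little-endian, no padding):
# uint32 sequence number | float64 timestamp [s] | 6 x float32 joint angles [deg]
PACKET_FMT = "<Id6f"
PACKET_SIZE = struct.calcsize(PACKET_FMT)  # 4 + 8 + 6 * 4 = 36 bytes


def pack_joint_packet(seq: int, timestamp: float, angles: Sequence[float]) -> bytes:
    if len(angles) != 6:
        raise ValueError("expected 6 joint angles")
    return struct.pack(PACKET_FMT, seq, timestamp, *angles)


def unpack_joint_packet(data: bytes) -> Tuple[int, float, Tuple[float, ...]]:
    if len(data) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(data)}")
    seq, timestamp, *angles = struct.unpack(PACKET_FMT, data)
    return seq, timestamp, tuple(angles)


if __name__ == "__main__":
    # Loopback demo: the "OAC" side sends one datagram, the "Unity" side receives it.
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))  # let the OS choose a free port
    rx.settimeout(1.0)
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    tx.sendto(pack_joint_packet(1, 12.5, [0.0, -30.0, 45.0, 0.0, 60.0, 90.0]),
              rx.getsockname())
    data, _ = rx.recvfrom(1024)
    print(unpack_joint_packet(data))  # (1, 12.5, (0.0, -30.0, 45.0, 0.0, 60.0, 90.0))
    tx.close()
    rx.close()
```

A fixed-size binary packet keeps each sample in a single small datagram, which suits UDP: a lost packet is simply superseded by the next one rather than retransmitted.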

A5.3.1.4 Update the Digital Shadow:

Create a C# script class in Unity that receives real-time joint angle data from the Robot OAC via UDP. This script continuously acquires the joint angles and updates the virtual replica to match the physical robot’s movements, ensuring that the Digital Shadow accurately reflects the movements of the physical robot.
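Unity scene objects may only be modified from the main thread, so a common pattern is to receive datagrams on a background thread and apply only the newest sample in the script’s `Update()` method. The Python sketch below illustrates that hand-over buffer under the assumption that each packet carries a sequence number; the class name is hypothetical, and the equivalent in the Unity project would be a small C# class guarded by a lock.

```python
import threading
from typing import Optional, Sequence, Tuple


class LatestJointState:
    """Thread-safe holder for the newest joint sample received over UDP.

    A receiver thread calls offer(); the render loop (Update() in a Unity
    script) calls latest() once per frame. Older or duplicate packets, which
    UDP may deliver out of order, are discarded using the sequence number.
    Sequence-number wrap-around is not handled in this sketch.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._seq: Optional[int] = None
        self._sample: Optional[Tuple[float, Tuple[float, ...]]] = None
        self.dropped = 0

    def offer(self, seq: int, timestamp: float, angles: Sequence[float]) -> bool:
        with self._lock:
            if self._seq is not None and seq <= self._seq:
                self.dropped += 1  # stale or duplicate datagram
                return False
            self._seq = seq
            self._sample = (timestamp, tuple(angles))
            return True

    def latest(self) -> Optional[Tuple[float, Tuple[float, ...]]]:
        with self._lock:
            return self._sample


state = LatestJointState()
state.offer(1, 0.00, [0, 0, 0, 0, 0, 0])
state.offer(3, 0.02, [1, 2, 3, 4, 5, 6])
state.offer(2, 0.01, [9, 9, 9, 9, 9, 9])  # arrives late and is ignored
print(state.latest(), state.dropped)  # (0.02, (1, 2, 3, 4, 5, 6)) 1
```

Applying only the newest sample, rather than queuing every packet, prevents a backlog from building up when rendering is slower than the data stream, which would otherwise add latency to the Digital Shadow.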

A5.3.1.5 Visual Feedback and Interaction:

Add visual effects or indicators to the Digital Shadow to provide feedback on the robot’s status, actions, and interactions in the virtual environment. For instance, you can display UI status elements that indicate the robot’s state or, more generally, the state of the RWC DT System.
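One simple status indicator is the health of the data link itself, since a frozen Digital Shadow can otherwise be mistaken for a stationary robot. The Python sketch below classifies the link from the time elapsed since the last received packet; the state names and the thresholds are hypothetical and would need tuning to the actual streaming rate. A Unity script could map the result to, for example, a green, yellow, or red UI element.

```python
from enum import Enum
from typing import Optional


class LinkStatus(Enum):
    NO_DATA = "no data"            # nothing received yet
    LIVE = "live"                  # fresh samples arriving
    STALE = "stale"                # samples delayed; the shadow may lag the robot
    DISCONNECTED = "disconnected"  # no samples for a long time


def link_status(now: float, last_rx: Optional[float],
                stale_after: float = 0.1, lost_after: float = 1.0) -> LinkStatus:
    """Classify the Robot OAC link from the age (in seconds) of the last packet.

    The default thresholds are illustrative placeholders only.
    """
    if last_rx is None:
        return LinkStatus.NO_DATA
    age = now - last_rx
    if age <= stale_after:
        return LinkStatus.LIVE
    if age <= lost_after:
        return LinkStatus.STALE
    return LinkStatus.DISCONNECTED


print(link_status(now=10.5, last_rx=10.0).value)  # stale
```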

A5.3.1.6 Environmental Interaction:

Ensure that the Digital Shadow interacts appropriately with the Unity scene. This includes casting shadows on other objects, colliding with the environment, and responding to lighting changes.

A5.3.1.7 Testing and Calibration:

Test the integration thoroughly in various scenarios to verify the accuracy of the Digital Shadow representation, including its latency relative to the physical robot. Calibrate the system as needed to fine-tune the alignment between the physical robot and its Digital Shadow.
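Latency is easier to judge from summary statistics than from individual samples. The Python sketch below computes the mean, a nearest-rank 95th percentile, the maximum, and the jitter (sample standard deviation) from pairs of send and receive timestamps. It assumes both timestamps come from synchronized clocks; without synchronization, a round-trip echo can be measured instead and halved, which assumes a symmetric network path. The function name and the output keys are hypothetical.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple


def latency_report(samples: Sequence[Tuple[float, float]]) -> Dict[str, float]:
    """Summarise one-way latency from (sent_time, received_time) pairs given in seconds.

    Assumes both timestamps come from synchronised clocks.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    lat = sorted(rx - tx for tx, rx in samples)
    rank = math.ceil(0.95 * len(lat))  # nearest-rank 95th percentile
    return {
        "mean_ms": 1000 * statistics.mean(lat),
        "p95_ms": 1000 * lat[rank - 1],
        "max_ms": 1000 * lat[-1],
        "jitter_ms": 1000 * statistics.stdev(lat),  # sample standard deviation
    }


report = latency_report([(0.000, 0.010), (0.020, 0.032), (0.040, 0.051), (0.060, 0.090)])
print({k: round(v, 2) for k, v in report.items()})
```

Reporting the percentile and maximum alongside the mean matters here, because an occasional long delay is what an operator perceives as the Digital Shadow "lagging", even when the average looks acceptable.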

A5.3.1.8 Optimization and Performance:

Optimize the integration for real-time performance to avoid any noticeable delays between the physical robot’s actions and the Digital Shadow’s movements in Unity.

By following this workflow, you can integrate the Digital Shadow of a physical robot into your Unity project, enabling real-time representation of, and interaction with, the robot’s movements and actions in the physical manufacturing environment. This integration is highly valuable for simulation, training, and visualizing the robot’s behavior in various scenarios.

To support these uses, two essential functionalities must be provided: (a) a recording function that stores the acquired robot motion, and (b) a playback function that replays recorded robot motions as needed. To add motion recording to the Unity project and enable motion playback for later robot motion analysis, extend the Digital Shadow integration workflow as follows:

A5.3.2.1 Data Recording Setup:

Create a C# script class in Unity responsible for recording the robot’s joint angles and saving the recorded motion data in a suitable file format (e.g., ASCII CSV or JSON) or in a dedicated database for future use. Each sample should be timestamped for accurate motion playback. The same script also includes a function to read back and play the recorded motion data.
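The recorder can be sketched as follows in Python; in Unity it would be a C# class fed from the same receive path as the Digital Shadow. The sketch assumes a CSV layout with one row per sample (a `t` column followed by `j1`, `j2`, … columns) and includes `start()`/`stop()` methods that a UI button or keyboard trigger could call. The class name, column names, and file layout are illustrative assumptions.

```python
import csv
import io
from typing import List, Sequence, TextIO, Tuple


class MotionRecorder:
    """Records timestamped joint-angle samples and writes/reads them as CSV."""

    def __init__(self, n_joints: int) -> None:
        self.n_joints = n_joints
        self.recording = False
        self.samples: List[Tuple[float, Tuple[float, ...]]] = []

    def start(self) -> None:
        self.samples.clear()
        self.recording = True

    def stop(self) -> None:
        self.recording = False

    def add(self, timestamp: float, angles: Sequence[float]) -> None:
        # Called for every received sample; ignored unless recording is active.
        if not self.recording:
            return
        if len(angles) != self.n_joints:
            raise ValueError("wrong number of joint angles")
        self.samples.append((timestamp, tuple(float(a) for a in angles)))

    def save_csv(self, f: TextIO) -> None:
        writer = csv.writer(f)
        writer.writerow(["t"] + [f"j{i + 1}" for i in range(self.n_joints)])
        for t, angles in self.samples:
            # repr() writes floats with full precision so they read back exactly
            writer.writerow([repr(t)] + [repr(a) for a in angles])

    @staticmethod
    def load_csv(f: TextIO) -> List[Tuple[float, Tuple[float, ...]]]:
        reader = csv.reader(f)
        next(reader)  # skip the header row
        return [(float(row[0]), tuple(float(x) for x in row[1:])) for row in reader]


rec = MotionRecorder(n_joints=2)
rec.add(0.0, [1.0, 2.0])  # ignored: recording not started
rec.start()
rec.add(0.1, [10.0, 20.0])
rec.add(0.2, [11.0, 21.5])
rec.stop()
buf = io.StringIO()
rec.save_csv(buf)
buf.seek(0)
print(MotionRecorder.load_csv(buf))  # [(0.1, (10.0, 20.0)), (0.2, (11.0, 21.5))]
```

When writing to a real file rather than an in-memory buffer, open it with `newline=""`, as the Python `csv` module documentation recommends.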

A5.3.2.2 Recording Trigger:

Create a user interface or a trigger mechanism that allows the user to start and stop the recording process in Unity. This can be achieved through buttons, keyboard inputs, or voice commands.

A5.3.2.3 Motion Playback:

Implement a motion playback system that reads the recorded data from file and animates the Digital Shadow model accordingly, offline. This allows users to replay the robot’s motions within the Unity environment.
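Rendered frames rarely fall exactly on recorded timestamps, so playback needs a rule for the pose between two samples. The Python sketch below assumes linear interpolation of joint angles, with clamping outside the recorded interval; the function name is hypothetical, and a Unity implementation would perform the same computation in C# before assigning the joint rotations.

```python
from bisect import bisect_right
from typing import List, Sequence


def sample_at(times: Sequence[float], frames: Sequence[Sequence[float]],
              t: float) -> List[float]:
    """Return joint angles at time t by linear interpolation of recorded frames.

    times must be strictly increasing; t outside the recording is clamped
    to the first or last frame.
    """
    if not times or len(times) != len(frames):
        raise ValueError("times and frames must be non-empty and of equal length")
    if t <= times[0]:
        return list(frames[0])
    if t >= times[-1]:
        return list(frames[-1])
    i = bisect_right(times, t)  # times[i-1] <= t < times[i]
    t0, t1 = times[i - 1], times[i]
    a = (t - t0) / (t1 - t0)
    return [q0 + a * (q1 - q0) for q0, q1 in zip(frames[i - 1], frames[i])]


times = [0.0, 1.0, 2.0]
frames = [[0.0, 0.0], [10.0, -10.0], [10.0, 0.0]]
print(sample_at(times, frames, 1.5))  # [10.0, -5.0]
```

Interpolating in joint space matches how the Digital Shadow is driven. Joints that rotate continuously and wrap around at ±180° would need angle-aware interpolation, which this sketch does not handle.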

A5.3.2.4 Playback Controls:

Provide controls for adjusting the playback speed, looping the motion, and seeking to specific timestamps to facilitate in-depth motion analysis and understanding.
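Speed, looping, and seeking can all be expressed as a mapping from elapsed wall-clock time to a position within the recording. The Python sketch below assumes the player advances once per frame by Unity’s `Time.deltaTime`; the class and method names are hypothetical. The returned position can then be used to sample the recording, for example by interpolating between recorded frames.

```python
class PlaybackClock:
    """Maps elapsed wall-clock time to a position in a recording of given duration.

    Supports playback speed (negative values play backwards), looping and
    seeking; all times are in seconds.
    """

    def __init__(self, duration: float, speed: float = 1.0, loop: bool = False) -> None:
        if duration <= 0:
            raise ValueError("duration must be positive")
        self.duration = duration
        self.speed = speed
        self.loop = loop
        self.position = 0.0

    def seek(self, t: float) -> None:
        self.position = min(max(t, 0.0), self.duration)

    def advance(self, dt: float) -> float:
        """Advance by wall-clock dt (e.g. the frame time) and return the new position."""
        p = self.position + dt * self.speed
        if self.loop:
            p %= self.duration  # Python's % also wraps negative positions correctly
        else:
            p = min(max(p, 0.0), self.duration)
        self.position = p
        return p


clock = PlaybackClock(duration=10.0, speed=2.0, loop=True)
clock.seek(8.0)
print(clock.advance(1.5))  # 1.0  (8 + 1.5 * 2 = 11, wrapped to 1)
```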

A5.3.2.5 User Interface for Playback:

Design a user-friendly interface that allows users to load, manage, and play back recorded motion data efficiently. This interface should provide clear visual feedback and options for motion analysis.

A5.3.2.6 Motion Comparison and Analysis:

Provide the ability to compare multiple recorded motions side by side in Unity. This feature is useful for evaluating the robot’s performance under different conditions or for comparing different robot models. Develop or implement motion analysis tools or scripts that can process the recorded data. This analysis can include studying joint angles, velocities, accelerations, and other relevant metrics for evaluating the performance and improving the behavior of the physical robot.
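Velocities and accelerations can be derived from the recorded, timestamped joint angles by numerical differentiation, and two recordings can be compared with a single error figure. The Python sketch below assumes per-joint signals and, for the comparison, two recordings that have already been resampled onto the same time grid. The finite-difference scheme (one-sided at the ends, a centered secant inside) and the RMS metric are illustrative choices, not methods prescribed by the workflow.

```python
import math
from typing import List, Sequence


def derivative(times: Sequence[float], values: Sequence[float]) -> List[float]:
    """Finite-difference derivative of one joint's signal (non-uniform steps allowed).

    Uses one-sided differences at the ends and the secant across both
    neighbours (t[i+1] - t[i-1]) in the interior.
    """
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two samples and equal lengths")
    d = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        d.append((values[hi] - values[lo]) / (times[hi] - times[lo]))
    return d


def rms_difference(a: Sequence[float], b: Sequence[float]) -> float:
    """RMS difference between two equally sampled joint trajectories."""
    if len(a) != len(b) or not a:
        raise ValueError("trajectories must be non-empty and equally sampled")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


t = [0.0, 1.0, 2.0, 3.0]
q = [0.0, 1.0, 4.0, 9.0]        # joint angle [deg]
vel = derivative(t, q)          # [deg/s]
acc = derivative(t, vel)        # [deg/s^2]
print(vel)  # [1.0, 2.0, 4.0, 5.0]
```

Differentiation amplifies measurement noise, so accelerations in particular are usually computed from smoothed data.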

A5.3.2.7 Data Export and Import:

Implement functionality to export and import the recorded motion data, allowing users to share data between different Unity projects or external applications for further analysis and optimisation.
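Exported files are easier to reuse in other projects or tools when they describe themselves. The Python sketch below writes a JSON document that carries, besides the samples, a format version, a robot identifier, and the angle units, and checks them on import. The schema and all field names are hypothetical.

```python
import json
from typing import Any, Dict, List, Sequence, Tuple

FORMAT_VERSION = 1  # hypothetical schema version


def export_motion(samples: Sequence[Tuple[float, Sequence[float]]], robot_model: str) -> str:
    """Serialise timestamped joint samples (degrees) with descriptive metadata."""
    doc: Dict[str, Any] = {
        "format_version": FORMAT_VERSION,
        "robot_model": robot_model,
        "angle_units": "deg",
        "samples": [{"t": t, "q": list(q)} for t, q in samples],
    }
    return json.dumps(doc, indent=2)


def import_motion(text: str) -> Tuple[str, List[Tuple[float, List[float]]]]:
    """Parse an exported document, rejecting versions or units this sketch cannot read."""
    doc = json.loads(text)
    if doc.get("format_version") != FORMAT_VERSION:
        raise ValueError("unsupported motion file version")
    if doc.get("angle_units") != "deg":
        raise ValueError("unsupported angle units")
    return doc["robot_model"], [(s["t"], s["q"]) for s in doc["samples"]]


text = export_motion([(0.0, [1.0, 2.0]), (0.1, [1.5, 2.5])], robot_model="example-robot")
print(import_motion(text))  # ('example-robot', [(0.0, [1.0, 2.0]), (0.1, [1.5, 2.5])])
```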

A5.3.2.8 Data Visualization:

Include visualizations or graphs that display motion data trends and patterns, providing insight into the robot’s performance and behaviour.

By incorporating the recording and motion playback functions, you enhance the Unity project’s utility: users can capture and analyse the robot’s motions accurately, save the data for future reference, and study the robot’s performance for research, training, or other purposes, including the most important one, robot programming.

As already noted, implementing the Digital Shadow function effectively for a physical robot in Unity requires a Robot Open Architecture Control system (Robot OAC System). The following notes explain why an open architecture control system is necessary:

- Data Accessibility: An OAC System allows easy access to real-time data from the robot’s sensors, actuators, and motion control. This data is crucial for capturing the robot’s movements and actions accurately for the Digital Shadow.

- Real-Time Communication: An OAC System facilitates real-time communication between the robot and the Unity project. It enables seamless transmission of positional and rotational data, ensuring the Digital Shadow stays synchronized with the physical robot’s movements.

- Interoperability: With an OAC System, you can use standard communication protocols and middleware (e.g., TCP/IP, ROS) that allow the Unity project to interact with a wide range of robots regardless of their manufacturer or specific control system.

- Flexibility and Adaptability: An OAC System allows for easy integration of custom or third-party sensors and peripherals that may be necessary for specific motion capture requirements. This flexibility enables you to tailor the Digital Shadow to the robot’s unique capabilities.

- Data Recording and Playback: An OAC System simplifies the process of recording and accessing real-time data, making it easier to save the robot’s motion data for future playback and analysis in Unity.

- Motion Control Parameter Access: With an OAC System, you can access and modify motion control parameters of the robot in real-time, which can be crucial for fine-tuning the Digital Shadow’s behaviour to match the robot’s actions accurately.

Overall, a Robot OAC System is a fundamental requirement for achieving a seamless and accurate Digital Shadow representation of a physical robot in Unity. It ensures data accessibility, real-time communication, and adaptability, empowering developers to create sophisticated Digital Shadow systems that enhance robot simulation, analysis, and interaction within the Unity environment.

Digital Twin integration - In addition to automatically replicating physical system states in their virtual counterparts (the Digital Shadow), the concept of mirrored spaces in a Digital Twin also refers to automatically replicating virtual system states and behaviors in their physical counterparts. Together, these two synchronizations allow real-world actions to be represented accurately in the virtual environment, and vice versa. For robotic processes, there are two options for mirroring the virtual space into the physical space:

- a) Real-time Mirroring - problematic:

Real-time mirroring involves directly synchronizing the virtual system with the physical system in real-time. This approach can be challenging due to potential delays, communication issues, and safety concerns, as it may lead to hazardous collisions with other physical objects or humans. Achieving perfect real-time mirroring can be difficult, especially if the physical system is subject to real-world constraints and external factors that are not present in the virtual environment.

- b) Offline Mirroring - safe and allows optimization:

Offline mirroring involves creating robot job code (a set of instructions written in a language specific to a particular robot controller) from processed, recorded data of the virtual robot’s motion. The motion data is captured during virtual demonstrations, simulations, and/or interactions with a human operator. The resulting job is then uploaded to the robot controller using a suitable communication method (e.g., UDP).

This approach provides a safer and more controlled environment for optimization, as the job code can be thoroughly tested and verified in the virtual space before it is executed on the physical robot. Developers can fine-tune and optimize the robot’s behavior in the virtual space, ensuring that the physical counterpart behaves as intended. This is where the Digital Twin concept delivers its key practical value in robotic manufacturing: it enables robot motions to be simulated, programmed, and tested before they are executed on the physical robot, which reduces the risk of errors and allows robot behavior to be refined within a safe virtual environment.

The essence of robot motion programming lies in creating the “robot job code,” a set of instructions written in a specific language applicable to a particular robot controller. Various techniques exist for generating robot job code. In the Digital Twin environment, users have the flexibility to control robot motion through pre-recorded robot job codes obtained from simulations or demonstrations (robot Programming by Demonstration), or through interactive programming with a suitable interface. This environment offers opportunities for effective robot motion programming, advanced robotics research, training, and simulation in a virtual space, while ensuring real-world applicability by enabling the execution of programmed motions on the physical robot.

Focusing solely on creating robot job code and transmitting it from the virtual to the physical space, the following high-level workflow completes the Digital Twin by integrating both essential functions: the Digital Shadow, and the interaction between the virtual and physical spaces achieved by transferring information and data from the virtual to the physical world. The workflow for robot job code transfer within the RWC Digital Twin involves the following steps:

A5.3.3.1 Robot motion generation in the virtual environment:

Using the available interaction interface, generate robot motions in the virtual environment through demonstration or simulation, as required to execute the given task.

A5.3.3.2 Recording and Storing Motion Data:

- Record the robot’s motion data during virtual demonstrations, simulations, or interactions.

- Store data, including joint angles, velocities, accelerations, and other relevant metrics.

- Add timestamps for precise motion playback.

A5.3.3.3 Motion Data Preprocessing:

- Preprocess the recorded motion data to ensure accuracy and consistency.

- Optimize the recorded robot motion data and incorporate the required process features.
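Two common preprocessing operations are resampling each joint signal onto a uniform time grid, which gives every later step evenly spaced, freshly timestamped samples, and removing high-frequency jitter. The Python sketch below uses linear resampling and a centered moving average. These are simple techniques swapped in for illustration; a real pipeline might prefer a Savitzky-Golay filter or spline fitting. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def resample_uniform(times: Sequence[float], values: Sequence[float],
                     dt: float) -> Tuple[List[float], List[float]]:
    """Linearly resample one joint's signal onto a uniform grid with step dt."""
    if len(times) < 2 or len(times) != len(values) or dt <= 0:
        raise ValueError("need >= 2 equally long samples and a positive dt")
    n_steps = int((times[-1] - times[0]) / dt + 1e-9)  # tolerance for float rounding
    out_t, out_v = [], []
    j = 0
    for k in range(n_steps + 1):
        t = times[0] + k * dt
        # Move to the recorded interval [times[j], times[j+1]] that contains t
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        a = (t - times[j]) / (times[j + 1] - times[j])
        out_t.append(t)
        out_v.append(values[j] + a * (values[j + 1] - values[j]))
    return out_t, out_v


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Centred moving average with an odd window; the window shrinks at the ends."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    h = window // 2
    out = []
    for i in range(len(values)):
        seg = values[max(0, i - h): i + h + 1]
        out.append(sum(seg) / len(seg))
    return out


t_u, q_u = resample_uniform([0.0, 1.0, 3.0], [0.0, 10.0, 30.0], dt=0.5)
print(moving_average([0.0, 0.0, 3.0, 0.0, 0.0], 3))  # [0.0, 1.0, 1.0, 1.0, 0.0]
```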

A5.3.3.4 Creating Robot Job Code:

- Perform Forward Kinematic Transformation (FKT): Use specialized software tools to apply forward kinematic transformation to the recorded robot joint angles. FKT calculates the position and orientation of the robot’s end effector (TCP) based on the joint angles, providing the robot task space coordinates.

- Segment Data into Kinematic and Action Primitives: Utilize specialized software tools to process and interpret the generated robot task space coordinates data. Apply segmentation techniques to divide the data into smaller, meaningful units known as kinematic and action primitives. Kinematic primitives represent elementary motion patterns, such as straight lines or arcs, while action primitives encompass specific robot behaviors required for the manufacturing process. Incorporate machine learning or pattern recognition algorithms to identify key motion behaviors and create reusable motion templates. This approach enhances the efficiency and flexibility of robot programming, allowing for the easy replication of complex robot motions and behaviors in both the virtual and physical environments.

- Convert Kinematic and Action Primitives into Job Code: Use specialized software tools to automatically generate robot job code from the segmented kinematic and action primitives. This job code contains a set of instructions necessary to accurately replicate the recorded and optimized virtual robot motion on the physical robot. Ensure that the generated job code is compatible with the syntax and capabilities of the physical robot’s control system. Perform any necessary translation or mapping to match the instructions with the robot’s supported commands and capabilities.
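The three steps above can be sketched as a minimal Python pipeline under explicit assumptions. The arm is described by standard Denavit-Hartenberg parameters (real values come from the robot manufacturer, and a fixed tool offset to the TCP would be appended as one more transform). Only the TCP position is extracted, although real job code also needs the orientation contained in the same transform. Segmentation uses the Ramer-Douglas-Peucker line-simplification algorithm, swapped in here as one simple way to obtain straight-line kinematic primitives; it detects neither arcs nor action primitives. The emitted `JOB`/`LIN` instruction format is hypothetical and vendor-neutral: a real post-processor must emit the target controller’s own job language (INFORM, for YASKAWA controllers) and respect its syntax and limits.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


# --- 1. Forward kinematic transformation (standard Denavit-Hartenberg) ---
def dh_matrix(theta: float, d: float, a: float, alpha: float) -> List[List[float]]:
    ct, st, ca, sa = math.cos(theta), math.sin(theta), math.cos(alpha), math.sin(alpha)
    return [[ct, -st * ca, st * sa, a * ct],
            [st, ct * ca, -ct * sa, a * st],
            [0.0, sa, ca, d],
            [0.0, 0.0, 0.0, 1.0]]


def tcp_position(joints_rad: Sequence[float],
                 dh_rows: Sequence[Tuple[float, float, float]]) -> Point:
    """Chain the link transforms (rows are d, a, alpha) and return the TCP position."""
    T = [[float(i == j) for j in range(4)] for i in range(4)]
    for q, (d, a, alpha) in zip(joints_rad, dh_rows):
        L = dh_matrix(q, d, a, alpha)
        T = [[sum(T[i][k] * L[k][j] for k in range(4)) for j in range(4)] for i in range(4)]
    return (T[0][3], T[1][3], T[2][3])


# --- 2. Segmentation into linear kinematic primitives (Ramer-Douglas-Peucker) ---
def _dist_to_segment(p: Point, a: Point, b: Point) -> float:
    ab = [b[i] - a[i] for i in range(3)]
    denom = sum(c * c for c in ab)
    if denom == 0.0:
        return math.dist(p, a)
    u = min(1.0, max(0.0, sum((p[i] - a[i]) * ab[i] for i in range(3)) / denom))
    return math.dist(p, [a[i] + u * ab[i] for i in range(3)])


def segment_linear(points: Sequence[Point], tol: float) -> List[int]:
    """Break-point indices; every point lies within tol of its straight segment."""
    if len(points) < 3:
        return list(range(len(points)))
    keep = {0, len(points) - 1}
    stack = [(0, len(points) - 1)]
    while stack:
        i, j = stack.pop()
        dists = [(_dist_to_segment(points[k], points[i], points[j]), k) for k in range(i + 1, j)]
        if dists:
            d_max, k_max = max(dists)
            if d_max > tol:
                keep.add(k_max)
                stack += [(i, k_max), (k_max, j)]
    return sorted(keep)


# --- 3. Job code generation (HYPOTHETICAL, vendor-neutral instruction format) ---
def to_job_code(name: str, points: Sequence[Point], breaks: Sequence[int],
                speed_mm_s: float) -> str:
    lines = [f"JOB {name}"]
    for n, idx in enumerate(breaks, start=1):
        x, y, z = points[idx]
        lines.append(f"{n:04d} LIN X={x:.1f} Y={y:.1f} Z={z:.1f} V={speed_mm_s:.1f}")
    lines.append("END")
    return "\n".join(lines)


# Planar two-link arm with 500 mm links (illustrative DH rows: d, a, alpha)
DH = [(0.0, 500.0, 0.0), (0.0, 500.0, 0.0)]
print([tuple(round(c, 3) for c in tcp_position(q, DH)) for q in ([0.0, 0.0], [math.pi / 2, 0.0])])

# An L-shaped recorded TCP path collapses to two straight-line primitives
path = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (200.0, 0.0, 0.0), (200.0, 100.0, 0.0), (200.0, 200.0, 0.0)]
breaks = segment_linear(path, tol=0.5)  # [0, 2, 4]
print(to_job_code("DEMO", path, breaks, speed_mm_s=250.0))
```

The tolerance in `segment_linear` trades program size against fidelity: a larger value yields fewer, longer moves that deviate more from the demonstrated path.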

A5.3.3.5 Upload Job Code to Physical Robot:

- Safety Measures: Integrate safety measures within the digital twin to prevent unintended collisions or unsafe motions. Implement collision detection and constraints to ensure the robot operates safely in both virtual and physical environments.

- Set up the data transmission mechanism (e.g., UDP) for sending the job codes from Unity to the physical robot. This may involve APIs, communication libraries, or protocols supported by the robot’s control system.

- Upload the generated robot job code to the physical robot controller using the established communication mechanism.
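UDP guarantees neither delivery nor ordering, so a job file sent this way needs an application-level check of completeness and integrity before it is used. The Python sketch below assumes a hypothetical chunk header (chunk index, chunk count, CRC-32 of the payload): the receiver accepts chunks in any order, rejects corrupted ones, and can list missing chunks for retransmission. The header layout and the names are illustrative. A TCP connection or the controller’s own file-transfer facility are alternatives, and a receiver running on the robot controller would be written with the controller’s SDK rather than in Python.

```python
import struct
import zlib
from typing import Dict, List, Optional

# Hypothetical chunk header: uint16 index | uint16 chunk count | uint32 CRC-32 of payload
HEADER = struct.Struct("<HHI")


def make_chunks(job_text: str, payload_size: int = 512) -> List[bytes]:
    data = job_text.encode("ascii")
    parts = [data[i:i + payload_size] for i in range(0, len(data), payload_size)] or [b""]
    if len(parts) > 0xFFFF:
        raise ValueError("job file too large for this header format")
    return [HEADER.pack(i, len(parts), zlib.crc32(p)) + p for i, p in enumerate(parts)]


class JobAssembler:
    """Receiver side: collects chunks in any order and rejects corrupted ones."""

    def __init__(self) -> None:
        self.parts: Dict[int, bytes] = {}
        self.count: Optional[int] = None

    def add(self, datagram: bytes) -> bool:
        index, count, crc = HEADER.unpack_from(datagram)
        payload = datagram[HEADER.size:]
        if zlib.crc32(payload) != crc:
            return False  # corrupted chunk: request retransmission
        self.count = count
        self.parts[index] = payload
        return True

    def missing(self) -> List[int]:
        """Indices still to be received (empty until the first valid chunk gives the count)."""
        if self.count is None:
            return []
        return [i for i in range(self.count) if i not in self.parts]

    def result(self) -> str:
        if self.count is None or self.missing():
            raise ValueError("job file incomplete")
        return b"".join(self.parts[i] for i in range(self.count)).decode("ascii")


job = "JOB DEMO\n" + "\n".join(f"{n:04d} LIN X=0.0 Y=0.0 Z={n}.0 V=250.0" for n in range(1, 41)) + "\nEND"
asm = JobAssembler()
for chunk in reversed(make_chunks(job, payload_size=256)):  # simulate out-of-order arrival
    asm.add(chunk)
print(asm.missing(), asm.result() == job)  # [] True
```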

A5.3.3.6 Execute on the Physical Robot:

Check the completeness and accuracy of the transferred job file. If it is verified to be correct, execute the job code on the physical robot in the real environment using the robot’s test mode of operation, taking all necessary precautions to ensure a safe and controlled execution.

A5.3.3.7 Monitor and Validate in Real-time:

- Configure the previously integrated Digital Shadow to offer live monitoring and visualization functions of the physical robot’s sensor data, status, and trajectory within the Unity environment. This enhancement allows users to track the robot’s movements and respond to real-time feedback, ensuring better control and safety during robot operation.

- Monitor and validate the real-time execution of the uploaded job code file to ensure that the physical robot’s behavior aligns with the virtual simulation.

- Identify and address any discrepancies or issues as they arise.
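Validating the execution amounts to comparing what the Digital Shadow receives from the physical robot with what the virtual simulation planned. The Python sketch below assumes both trajectories are already time-aligned and sampled at the same instants (for example, by resampling the measured stream onto the planned timestamps). It reports the worst joint deviation and the samples that exceed a tolerance. The function name and the tolerance are hypothetical; a Cartesian TCP deviation could be checked in the same way.

```python
from typing import List, Sequence, Tuple


def check_tracking(planned: Sequence[Sequence[float]],
                   measured: Sequence[Sequence[float]],
                   tol_deg: float) -> Tuple[float, List[int]]:
    """Compare time-aligned planned vs. measured joint samples.

    Returns the largest absolute joint deviation (deg) and the indices of
    samples where any joint deviates by more than tol_deg.
    """
    if len(planned) != len(measured):
        raise ValueError("trajectories must be time-aligned and equally long")
    worst = 0.0
    flagged = []
    for k, (qp, qm) in enumerate(zip(planned, measured)):
        dev = max(abs(a - b) for a, b in zip(qp, qm))
        worst = max(worst, dev)
        if dev > tol_deg:
            flagged.append(k)
    return worst, flagged


planned = [[0.0, 0.0], [10.0, 5.0], [20.0, 10.0]]
measured = [[0.0, 0.1], [10.2, 5.0], [23.0, 10.0]]
print(check_tracking(planned, measured, tol_deg=1.0))  # (3.0, [2])
```

The flagged indices point directly at the portion of the job code to revisit when refining and optimizing the program.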

A5.3.3.8 Refine and Optimize:

- Refine and optimize the robot job code based on the real-world execution results.

- Iteratively fine-tune and improve the robot’s behavior in both the virtual and physical spaces.

By following this workflow, the RWC Digital Twin becomes functionally complete, enabling efficient and secure transfer of robot job code. This provides seamless bidirectional integration between the virtual and physical spaces and a reliable platform for safe and effective robot Programming by Demonstration, experimentation, and training.

Input

Fully functional XR System with integrated Building Bricks A1 to A4; XR scene created; XR interactions created; XR System tested, debugged, and optimized; specifications for building the manufacturing DT; technical documentation and similar materials for the manufacturing HW and SW used.

Output

Manufacturing DT system (including the Digital Shadow) fully operational, with functional mechanisms for bidirectional data and information exchange between the physical and virtual spaces, and ready for deployment, including dedicated SW for real-time data acquisition, recording and playback of system/operator behaviour, and operating the physical system in real time or offline.

Control

Detailed assessment of the integration process by checking the achieved functional performance, debugging, and applying additional settings to both the physical hardware and software and the virtual model’s software and hardware environment.

Resource

Technical documentation, user guides, instructions, SW development tools, the Open Architecture Control system of the manufacturing hardware, network and multiprocess communication tools, dedicated tools for programming and setting up manufacturing equipment control systems and the cell controller, and dedicated SW for simulation, acquired-data processing, and job code generation.