A4 - UI Creation

It is recommended to sketch the user interface behavior as a storyboard to specify the initial vision. Human-machine interaction design then requires that:

- The scene is properly constructed, with a clear definition of actors, i.e., the objects that can potentially be handled

- Actions on actors are clearly identified (a use case model may be drawn)

- Actor behavior is specified. It can be a very basic reflex action or a more complex behavior developed in a dedicated script, and it may involve simulations of varying complexity
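
As an illustration of the last point, a basic reflex behavior can be sketched as a small state machine attached to an actor. The sketch below is only one possible reading: the state names, the action names (`pointer_enter`, `grab`, and so on) and the feedback values are hypothetical and are not taken from any particular engine.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Tuple


class State(Enum):
    IDLE = "idle"
    HOVERED = "hovered"
    GRABBED = "grabbed"


@dataclass(frozen=True)
class Feedback:
    color: str               # visual feedback
    sound: str               # audio feedback ("" means silence)
    haptic_amplitude: float  # haptic feedback, 0.0 to 1.0


# Hypothetical rendering rules: one feedback bundle per actor state.
RENDERING_RULES: Dict[State, Feedback] = {
    State.IDLE: Feedback("grey", "", 0.0),
    State.HOVERED: Feedback("yellow", "tick", 0.2),
    State.GRABBED: Feedback("green", "click", 0.6),
}

# Hypothetical reflex behavior: (current state, action) -> next state.
TRANSITIONS: Dict[Tuple[State, str], State] = {
    (State.IDLE, "pointer_enter"): State.HOVERED,
    (State.HOVERED, "pointer_leave"): State.IDLE,
    (State.HOVERED, "grab"): State.GRABBED,
    (State.GRABBED, "release"): State.HOVERED,
}


@dataclass
class Actor:
    name: str
    state: State = State.IDLE

    def react(self, action: str) -> Feedback:
        """Apply an action; unknown (state, action) pairs leave the state unchanged."""
        self.state = TRANSITIONS.get((self.state, action), self.state)
        return RENDERING_RULES[self.state]
```

A more complex behavior would replace the transition table with a script or a simulation step, while keeping the same separation between state changes and rendering.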

Three basic actions are used:

- Selection: how do I select an actor?

- Navigation: how do I move inside the scene (change my point of view)?

- Manipulation: how do I act on an actor?

These actions are not independent: an actor must be selected before it can be manipulated, and an actor can also be selected for navigation (for example, as a teleportation target). Nevertheless, this decomposition means that every human-machine interaction specification can be described with these three operations.

The technical procedure for selecting, navigating or manipulating then depends on the choice of device and on the mapping between the device's sensors and actuators and the selection, navigation and manipulation metaphors.
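
The mapping between device controls and the three basic actions can be sketched as a lookup table. This is a minimal, engine-independent illustration: the device and control names (`controller`, `trigger`, `grip`, `thumbstick_click`), the `Session` class and the handlers are all assumptions made for the example, not the API of any real toolkit.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


class Action(Enum):
    SELECTION = "selection"
    NAVIGATION = "navigation"
    MANIPULATION = "manipulation"


@dataclass
class Actor:
    name: str
    position: Vec3 = (0.0, 0.0, 0.0)


@dataclass
class Session:
    actors: Dict[str, Actor]
    viewpoint: Vec3 = (0.0, 0.0, 0.0)
    selected: Optional[Actor] = None


def select_actor(session: Session, target: str = "") -> None:
    """Selection: make the named actor the current selection."""
    session.selected = session.actors[target]


def teleport_to_selection(session: Session, target: str = "") -> None:
    """Navigation: move the viewpoint to the selected actor."""
    if session.selected is None:
        raise RuntimeError("teleportation needs a selected actor")
    session.viewpoint = session.selected.position


def lift_selection(session: Session, target: str = "") -> None:
    """Manipulation: raise the selected actor by one unit along y."""
    if session.selected is None:
        raise RuntimeError("manipulation needs a selected actor")
    x, y, z = session.selected.position
    session.selected.position = (x, y + 1.0, z)


Handler = Callable[[Session, str], None]

# Hypothetical mapping: (device, control) -> (basic action, handler).
MAPPING: Dict[Tuple[str, str], Tuple[Action, Handler]] = {
    ("controller", "trigger"): (Action.SELECTION, select_actor),
    ("controller", "thumbstick_click"): (Action.NAVIGATION, teleport_to_selection),
    ("controller", "grip"): (Action.MANIPULATION, lift_selection),
}


def handle_event(session: Session, device: str, control: str, target: str = "") -> Action:
    """Dispatch a device event to its handler and report which basic action ran."""
    action, handler = MAPPING[(device, control)]
    handler(session, target)
    return action
```

Changing the device then only means changing the keys of `MAPPING`; the handlers, which encode the metaphors, stay the same. The two `RuntimeError` checks reflect the dependency noted above: manipulation and teleportation both require a prior selection.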

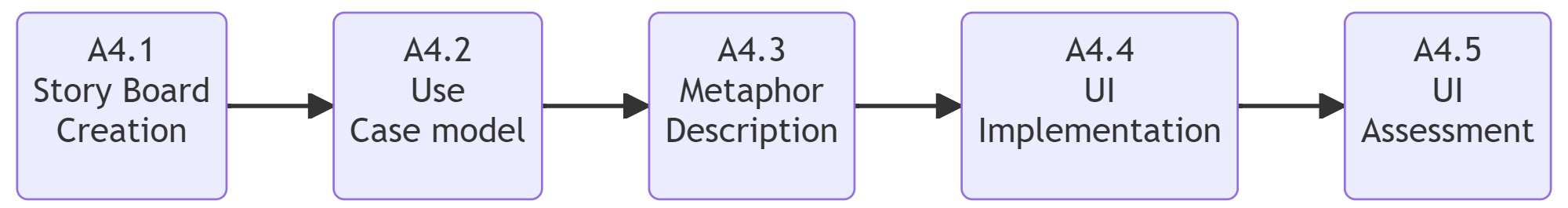

Workflow Structure

| INPUTS: Scene Actors |  | OUTPUTS: UI for XR environment |

UI Creation workflow

Workflow Building-Blocks

| Activities | Overview |
|---|---|
| A4.1 Storyboard Creation | • Description: The storyboard presents the main expected views and highlights what is expected. • Input: User interface goals. • Output: Sketches and metaphors. • Control: Knowledge about the device and visualization capacities. • Resource: Drawing tools, information sources. |
| A4.2 Create a Use Case model (UML-like description) | • Description: The use case model links the actors of the virtual scene to the human operators. Decompose the use case functions into Selection/Navigation/Manipulation keywords (see Bowman or Poupyrev). Pay attention to the number of degrees of freedom expected for each action. The use case model usually highlights actors, and it is good practice to define information sources as specific actors. The model then makes it possible to check that end-user functions are completely defined and that end users can access the expected information. • Input: Scene Actors. • Output: Functions (extend, inherit). • Control: User interaction specification. • Resource: UML Framework. |
| A4.3 Metaphor Description | • Description: List the various states that an actor can reach. An actor is a metaphor for rendering an object in the scene and is governed by parameters and states. Define the rendering rules that map a (state, parameters) pair to visual, auditory and haptic feedback. Actor parameters can include position, orientation, colors, textures or special effects for visual rendering, as well as haptic parameters (force, torque) and audio effects. • Input: Scene Actors, Rendering system. • Output: Parameterized scene with controls (we call it the set of metaphors). • Control: Rendering system capacity. • Resource: List of objects/assets available. |
| A4.4 Implementation | • Description: Depending on the rendering development environment (Unity3D, Unreal, Babylon.js), plugins are available to connect VR/AR, rendering and interaction devices. Implement the mapping: in a given state, acting on a device button under a given condition changes actor parameters. This mapping is usually scripted in a language specific to the environment (C# in Unity3D; C++, Python or Blueprints in Unreal; GDScript in Godot; Python in Blender; etc.). To complete this activity, check the Interaction section. • Input: Metaphors, Rendering system. • Output: New scripts and compiled system. • Control: Expected mapping. • Resource: Script libraries. |
| A4.5 UI Assessment | • Description: Several tests should be conducted: (a) Function tests, to check that scripts and mappings are properly implemented. (b) Integration tests, to check that the overall interface works correctly; basic scenarios can be tested here, as well as monkey tests with largely random actions. (c) Cognitive and ergonomic tests: even though the user interface is always specified with the aim of minimizing ergonomic difficulties and cognitive load, this should be checked through experiments with end users, using adequate measures and questionnaires: 1. Bio-sensor measures. 2. Task performance (time to complete a given task, number of errors). 3. Questionnaires: NASA-TLX (workload) and SUS (System Usability Scale). • Input: Executable framework. • Output: Evaluation grid, re-design and correction specifications. • Control: Initial performance specification. • Resource: Sensors, questionnaires, etc. |
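
For the questionnaires listed under A4.5, scoring can be automated. The sketch below uses the standard SUS scoring rule (ten items rated 1 to 5, odd items contribute `rating - 1`, even items contribute `5 - rating`, and the sum is multiplied by 2.5) and the unweighted "raw" variant of NASA-TLX (the mean of the six subscale ratings). The function names and the dictionary keys are assumptions made for the example, and the full NASA-TLX procedure with pairwise weighting is not covered.

```python
from statistics import mean
from typing import Mapping, Sequence

# The six NASA-TLX subscales; the key spelling is an assumption of this sketch.
TLX_SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def sus_score(responses: Sequence[int]) -> float:
    """Return the SUS score (0 to 100) for one participant's ten ratings (1 to 5)."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each SUS response must be an integer from 1 to 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Return the unweighted (raw) NASA-TLX workload: mean of the six subscales."""
    missing = [name for name in TLX_SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return mean(ratings[name] for name in TLX_SUBSCALES)
```

Scores computed per participant can then be entered in the evaluation grid alongside task time and error counts.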